环境 硬件环境

CPU

内存

硬盘

软件环境

操作系统

Ceph软件版本

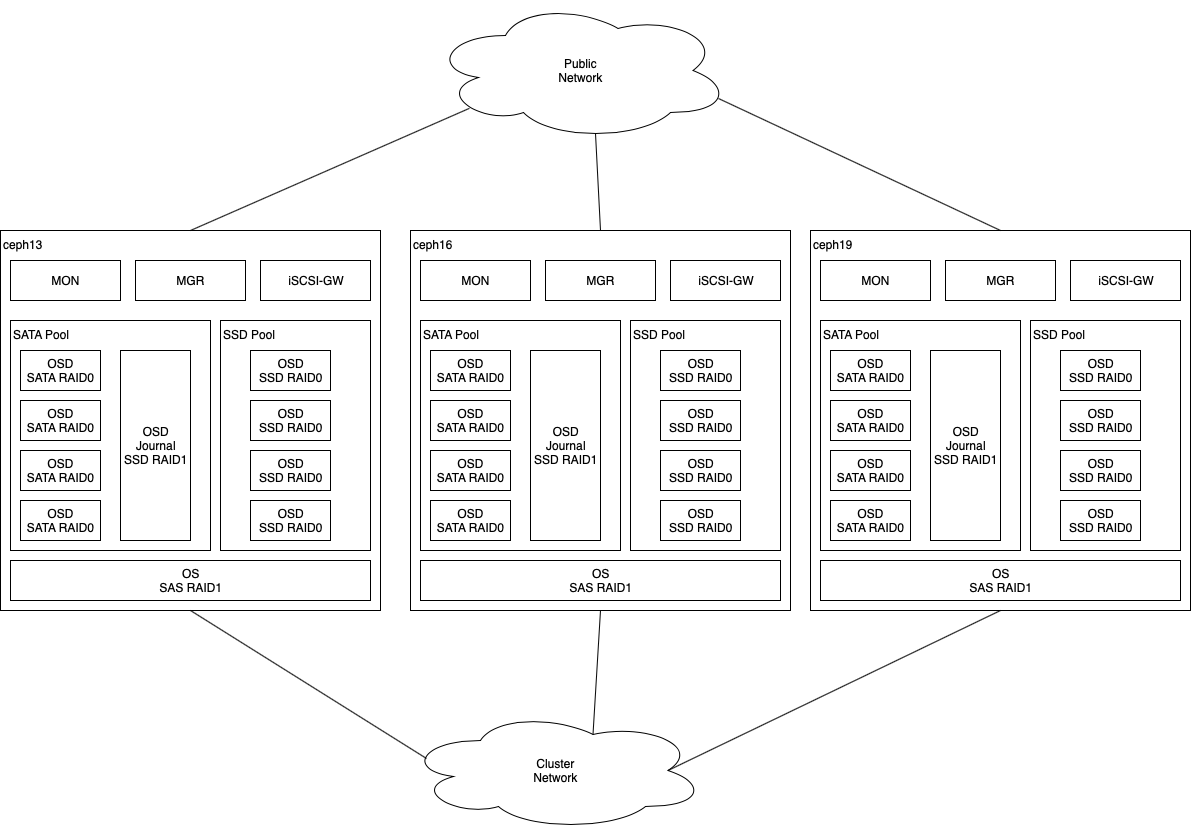

部署方案

OS 两个SAS盘(300G)做成RAID1,用于存储操作系统。

SATA Pool

OSD Data

OSD Journal

ObjectStore

Replication

SSD Pool

OSD

ObjectStore

Replication

部署步骤 Ceph集群部署 Ceph集群的节点分为管理节点、MON节点、OSD节点

节点

用途

MON节点

用于监控集群、为客户端提供ClusterMap等服务

OSD节点

数据存储节点,用于完成数据的持久化工作

ADMIN节点

集群维护管理节点,提供管理员权限

MDS节点

提供Cephfs服务

RGW节点

提供对象存储服务

MGR节点

提供监控(zabbix、prometheus等)、dashboard等服务

iSCSI-GW节点

提供iSCSI Target 服务

更多Ceph内容,请见此链接

需要在部署集群前,做通管理节点与集群节点(包括:MON节点、OSD节点)之间的ssh无密码访问。

将各个节点的hostname和ip地址对应后,写入/etc/hosts文件,并将此文件同步到各个节点上1 2 3 4 5 6 7 [root@ceph13 cluster]# cat /etc/hosts 127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6 10.53.2.13 ceph13 ceph13 10.53.2.16 ceph16 ceph16 10.53.2.19 ceph19 ceph19

Ceph-deploy 安装 在管理节点上安装Ceph-deploy工具。

安装EPEL

1 yum install -y epel-release

修改/增加ceph.repo,并安装ceph-deploy

1 2 3 4 5 6 7 [ceph-noarch] name=Ceph noarch packages baseurl=https://download.ceph.com/rpm-nautilus/el7/noarch enabled=1 gpgcheck=1 type=rpm-md gpgkey=https://download.ceph.com/keys/release.asc

1 2 yum update -y yum install -y ceph-deploy

Create the cluster 1 2 3 mkdir {my-cluster} cd {my-cluster} ceph-deploy new {initial-monitor-node(s)}

eg:

1 2 3 [root@ceph13 ~]# mkdir cluster [root@ceph13 ~]# cd cluster [root@ceph13 cluster]# ceph-deploy new ceph13 ceph16 ceph19

修改集群初始化配置 修改{my-cluster}目录中的ceph.conf

1 2 3 4 5 6 7 8 9 10 11 12 [root@ceph13 cluster]# ls -al 总用量 600 drwxr-xr-x. 2 root root 244 8月 21 15:51 . dr-xr-x---. 8 root root 4096 8月 11 16:27 .. -rw-------. 1 root root 113 8月 10 16:17 ceph.bootstrap-mds.keyring -rw-------. 1 root root 113 8月 10 16:17 ceph.bootstrap-mgr.keyring -rw-------. 1 root root 113 8月 10 16:17 ceph.bootstrap-osd.keyring -rw-------. 1 root root 113 8月 10 16:17 ceph.bootstrap-rgw.keyring -rw-------. 1 root root 151 8月 10 16:17 ceph.client.admin.keyring -rw-r--r--. 1 root root 293 8月 10 15:15 ceph.conf -rw-r--r--. 1 root root 580431 8月 10 17:13 ceph-deploy-ceph.log -rw-------. 1 root root 73 8月 10 15:12 ceph.mon.keyring

配置public、cluster网络

配置osd rebalance

配置scrub和deep-scrub

关闭crushmap 启动更新配置1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 [global] public network = {ip-address}/{bits} cluster network = {ip-address}/{bits} osd crush update on start = false [osd] osd max backfills = 1 osd recovery op priority = 1 osd recovery max active = 1 osd client op priority = 63 osd recovery delay start = 0.5 osd scrub chunk min = 1 osd scrub chunk max = 5 osd scrub sleep = 5 osd deep scrub interval = 2592000 # 60(秒)*60(分)*24(时)*30(天)

eg:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 [root@ceph13 cluster]# cat /etc/ceph/ceph.conf [global] fsid = 20192dd5-3228-4135-9831-c9d7de74a890 mon_initial_members = ceph13, ceph16, ceph19 mon_host = 10.53.2.13,10.53.2.16,10.53.2.19 auth_cluster_required = cephx auth_service_required = cephx auth_client_required = cephx osd crush update on start = false public network = 10.53.2.0/24 cluster network = 10.53.1.0/24 [osd] osd max backfills = 1 osd recovery op priority = 1 osd recovery max active = 1 osd client op priority = 63 osd recovery delay start = 0.5 osd scrub chunk min = 1 osd scrub chunk max = 5 osd scrub sleep = 5 osd deep scrub interval = 2592000

ceph软件安装 1 ceph-deploy install --stable nautilus {ceph-node(s)}

eg:

1 ceph-deploy install --stable nautilus ceph13 ceph16 ceph19

*** 此处安装过程比较慢,失败的话建议重试几次。***

MON节点创建初始化 1 ceph-deploy mon create-initial

此命令会根据ceph.conf进行MON节点的创建及初始化工作

部署管理节点 1 ceph-deploy admin {ceph-node(s)}

eg:

1 ceph-deploy admin ceph13 ceph16 ceph19

部署mgr节点(可选) 1 ceph-deploy mgr create {ceph-node(s)}

eg:

1 ceph-deploy mgr create ceph13 ceph16 ceph19

*** 若不部署mgr服务,集群会有告警,但不影响使用。***

部署OSD节点(Filestore) 1 ceph-deploy osd create --filestore --data {device path} --journal {device path} {ceph-node}

--filestore--data--journalceph-node

eg:

1 2 3 4 5 6 7 8 9 10 11 12 13 ceph-deploy osd create ceph13 --filestore --data /dev/sdb --journal /dev/sdf1 ceph-deploy osd create ceph13 --filestore --data /dev/sdb --journal /dev/sdf1 ceph-deploy osd create ceph13 --filestore --data /dev/sdc --journal /dev/sdf2 ceph-deploy osd create ceph13 --filestore --data /dev/sdd --journal /dev/sdf3 ceph-deploy osd create ceph13 --filestore --data /dev/sde --journal /dev/sdf4 ceph-deploy osd create ceph16 --filestore --data /dev/sdb --journal /dev/sdf1 ceph-deploy osd create ceph16 --filestore --data /dev/sdc --journal /dev/sdf2 ceph-deploy osd create ceph16 --filestore --data /dev/sdd --journal /dev/sdf3 ceph-deploy osd create ceph16 --filestore --data /dev/sde --journal /dev/sdf4 ceph-deploy osd create ceph19 --filestore --data /dev/sdb --journal /dev/sdf1 ceph-deploy osd create ceph19 --filestore --data /dev/sdc --journal /dev/sdf2 ceph-deploy osd create ceph19 --filestore --data /dev/sdd --journal /dev/sdf3 ceph-deploy osd create ceph19 --filestore --data /dev/sde --journal /dev/sdf4

部署OSD节点(Bluestore) 1 ceph-deploy osd create --data {device path} {ceph-node}

--dataceph-node

eg:

1 2 3 4 5 6 7 8 9 10 11 12 ceph-deploy osd create ceph13 --data /dev/sdg ceph-deploy osd create ceph13 --data /dev/sdh ceph-deploy osd create ceph13 --data /dev/sdi ceph-deploy osd create ceph13 --data /dev/sdj ceph-deploy osd create ceph16 --data /dev/sdg ceph-deploy osd create ceph16 --data /dev/sdh ceph-deploy osd create ceph16 --data /dev/sdi ceph-deploy osd create ceph16 --data /dev/sdj ceph-deploy osd create ceph19 --data /dev/sdg ceph-deploy osd create ceph19 --data /dev/sdh ceph-deploy osd create ceph19 --data /dev/sdi ceph-deploy osd create ceph19 --data /dev/sdj

部署完所有OSD节点后,此集群部署完成可以通过ceph -s查看集群状态。

eg:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 [root@ceph13 cluster]# ceph -s cluster: id: 20192dd5-3228-4135-9831-c9d7de74a890 health: HEALTH_OK services: mon: 3 daemons, quorum ceph13,ceph16,ceph19 (age 10d) mgr: ceph13(active, since 10d), standbys: ceph16, ceph19 osd: 24 osds: 24 up (since 9d), 24 in (since 10d) data: pools: 3 pools, 1536 pgs objects: 20.80k objects, 81 GiB usage: 244 GiB used, 70 TiB / 71 TiB avail pgs: 1536 active+clean io: client: 309 KiB/s rd, 145 KiB/s wr, 41 op/s rd, 12 op/s wr [root@ceph13 cluster]# ceph osd tree ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF -15 5.21997 root ssd -9 1.73999 host ceph13_ssd 12 ssd 0.43500 osd.12 up 1.00000 1.00000 13 ssd 0.43500 osd.13 up 1.00000 1.00000 14 ssd 0.43500 osd.14 up 1.00000 1.00000 15 ssd 0.43500 osd.15 up 1.00000 1.00000 -11 1.73999 host ceph16_ssd 16 ssd 0.43500 osd.16 up 1.00000 1.00000 17 ssd 0.43500 osd.17 up 1.00000 1.00000 18 ssd 0.43500 osd.18 up 1.00000 1.00000 19 ssd 0.43500 osd.19 up 1.00000 1.00000 -13 1.73999 host ceph19_ssd 20 ssd 0.43500 osd.20 up 1.00000 1.00000 21 ssd 0.43500 osd.21 up 1.00000 1.00000 22 ssd 0.43500 osd.22 up 1.00000 1.00000 23 ssd 0.43500 osd.23 up 1.00000 1.00000 -1 65.41200 root default -3 21.80400 host ceph13_sata 0 hdd 5.45099 osd.0 up 1.00000 1.00000 1 hdd 5.45099 osd.1 up 1.00000 1.00000 2 hdd 5.45099 osd.2 up 1.00000 1.00000 3 hdd 5.45099 osd.3 up 1.00000 1.00000 -5 21.80400 host ceph16_sata 4 hdd 5.45099 osd.4 up 1.00000 1.00000 5 hdd 5.45099 osd.5 up 1.00000 1.00000 6 hdd 5.45099 osd.6 up 1.00000 1.00000 7 hdd 5.45099 osd.7 up 1.00000 1.00000 -7 21.80400 host ceph19_sata 8 hdd 5.45099 osd.8 up 1.00000 1.00000 9 hdd 5.45099 osd.9 up 1.00000 1.00000 10 hdd 5.45099 osd.10 up 1.00000 1.00000 11 hdd 5.45099 osd.11 up 1.00000 1.00000

iscsi-gw部署 依赖软件安装 iscsi-gw软件在192.168.1.70的/home/zhoub/rpms目录下。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 [zhoub@CentOS ceph-iscsi-rpms]$ ls -al 总用量 588 drwxr-xr-x. 9 zhoub zhoub 4096 6月 19 16:31 . drwxrwxr-x. 3 zhoub zhoub 53 8月 8 13:42 .. drwxrwxr-x. 10 zhoub zhoub 4096 6月 19 16:10 ceph-iscsi drwxrwxr-x. 9 zhoub zhoub 213 6月 19 16:10 configshell-fb -rw-r--r--. 1 root root 284340 8月 11 2017 libnl3-3.2.28-4.el7.x86_64.rpm drwxrwxr-x. 2 zhoub zhoub 240 6月 19 15:51 pip drwxr-xr-x. 2 zhoub zhoub 4096 6月 19 16:10 pip_packages -rw-r--r--. 1 root root 137912 11月 12 2018 pyOpenSSL-0.13.1-4.el7.x86_64.rpm -rw-r--r--. 1 root root 96088 7月 4 2014 pyparsing-1.5.6-9.el7.noarch.rpm -rw-r--r--. 1 root root 58008 7月 4 2014 python-kmod-0.9-4.el7.x86_64.rpm -rw-r--r--. 1 zhoub zhoub 76 6月 19 16:10 requirement.txt drwxrwxr-x. 11 zhoub zhoub 223 6月 19 16:10 rtslib-fb drwxrwxr-x. 10 zhoub zhoub 248 6月 19 16:10 targetcli-fb drwxr-xr-x. 2 root root 112 6月 19 16:28 tcmu-runner

pip安装 在rpms/ceph-iscsi-rpms/pip目录下。

pip 安装依赖的软件

1 2 3 4 5 6 7 8 9 [zhoub@CentOS pip]$ ls -al 总用量 4080 drwxrwxr-x. 2 zhoub zhoub 240 6月 19 15:51 . drwxr-xr-x. 9 zhoub zhoub 4096 6月 19 16:31 .. -rw-rw-r--. 1 zhoub zhoub 3700178 6月 19 15:31 pip_19.1.1.tar.gz -rw-r--r--. 1 root root 5932 3月 14 2015 python-backports-1.0-8.el7.x86_64.rpm -rw-r--r--. 1 root root 12896 4月 25 2018 python-backports-ssl_match_hostname-3.5.0.1-1.el7.noarch.rpm -rw-r--r--. 1 root root 35176 11月 21 2016 python-ipaddress-1.0.16-2.el7.noarch.rpm -rw-r--r--. 1 root root 406404 8月 11 2017 python-setuptools-0.9.8-7.el7.noarch.rpm

安装pip及其依赖的软件

1 2 3 4 5 cd pip yum localinstall *.rpm tar -xzvf ./pip_19.1.1.tar.gz cd pip_19.1.1 python setup.py install

安装pip package 在线安装使用rpms/ceph-iscsi-rpms/requirement.txt文件进行安装

1 2 3 4 5 6 7 8 9 [zhoub@CentOS ceph-iscsi-rpms]$ cat requirement.txt pyudev> =0.16.1 Flask requests crypto netifaces urwid pyparsing cryptography

1 pip install -r ./requirement.txt

离线安装包在rpms/ceph-iscsi-rpms/pip_packages中

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 [zhoub@CentOS pip_packages]$ ls -al 总用量 5828 drwxr-xr-x. 2 zhoub zhoub 4096 6月 19 16:10 . drwxr-xr-x. 9 zhoub zhoub 4096 6月 19 16:31 .. -rw-r--r--. 1 zhoub zhoub 101571 6月 19 16:10 asn1crypto-0.24.0-py2.py3-none-any.whl -rw-r--r--. 1 zhoub zhoub 157119 6月 19 16:10 certifi-2019.6.16-py2.py3-none-any.whl -rw-r--r--. 1 zhoub zhoub 415091 6月 19 16:10 cffi-1.12.3-cp27-cp27mu-manylinux1_x86_64.whl -rw-r--r--. 1 zhoub zhoub 133356 6月 19 16:10 chardet-3.0.4-py2.py3-none-any.whl -rw-r--r--. 1 zhoub zhoub 81299 6月 19 16:10 Click-7.0-py2.py3-none-any.whl -rw-r--r--. 1 zhoub zhoub 18019 6月 19 16:10 crypto-1.4.1-py2.py3-none-any.whl -rw-r--r--. 1 zhoub zhoub 2288455 6月 19 16:10 cryptography-2.7-cp27-cp27mu-manylinux1_x86_64.whl -rw-r--r--. 1 zhoub zhoub 12427 6月 19 16:10 enum34-1.1.6-py2-none-any.whl -rw-r--r--. 1 zhoub zhoub 92053 6月 19 16:10 Flask-1.0.3-py2.py3-none-any.whl -rw-r--r--. 1 zhoub zhoub 58594 6月 19 16:10 idna-2.8-py2.py3-none-any.whl -rw-r--r--. 1 zhoub zhoub 18155 6月 19 16:10 ipaddress-1.0.22-py2.py3-none-any.whl -rw-r--r--. 1 zhoub zhoub 16743 6月 19 16:10 itsdangerous-1.1.0-py2.py3-none-any.whl -rw-r--r--. 1 zhoub zhoub 124883 6月 19 16:10 Jinja2-2.10.1-py2.py3-none-any.whl -rw-r--r--. 1 zhoub zhoub 24348 6月 19 16:10 MarkupSafe-1.1.1-cp27-cp27mu-manylinux1_x86_64.whl -rw-r--r--. 1 zhoub zhoub 590559 6月 19 16:10 Naked-0.1.31-py2.py3-none-any.whl -rw-r--r--. 1 zhoub zhoub 31249 6月 19 16:10 netifaces-0.10.9-cp27-cp27mu-manylinux1_x86_64.whl -rw-r--r--. 1 zhoub zhoub 158295 6月 19 16:10 pycparser-2.19.tar.gz -rw-r--r--. 1 zhoub zhoub 62288 6月 19 16:10 pyparsing-2.4.0-py2.py3-none-any.whl -rw-r--r--. 1 zhoub zhoub 89469 6月 19 16:10 pyudev-0.21.0.tar.gz -rw-r--r--. 1 zhoub zhoub 274442 6月 19 16:10 PyYAML-5.1.1.tar.gz -rw-r--r--. 1 zhoub zhoub 57952 6月 19 16:10 requests-2.22.0-py2.py3-none-any.whl -rw-r--r--. 1 zhoub zhoub 4933 6月 19 16:10 shellescape-3.4.1-py2.py3-none-any.whl -rw-r--r--. 1 zhoub zhoub 10586 6月 19 16:10 six-1.12.0-py2.py3-none-any.whl -rw-r--r--. 1 zhoub zhoub 150942 6月 19 16:10 urllib3-1.25.3-py2.py3-none-any.whl -rw-r--r--. 1 zhoub zhoub 604167 6月 19 16:10 urwid-2.0.1.tar.gz -rw-r--r--. 1 zhoub zhoub 327611 6月 19 16:10 Werkzeug-0.15.4-py2.py3-none-any.whl

安装依赖rpms rpm包在rpms/ceph-iscsi-rpms/目录下。

1 2 3 4 yum localinstall libnl3-3.2.28-4.el7.x86_64.rpm yum localinstall pyOpenSSL-0.13.1-4.el7.x86_64.rpm yum localinstall pyparsing-1.5.6-9.el7.noarch.rpm yum localinstall python-kmod-0.9-4.el7.x86_64.rpm

安装TCMU-RUNNER rpm包在rpms/ceph-iscsi-rpms/tcmu-runner目录下。

1 2 cd tcmu-runner yum localinstall *.rpm

启动tcmu-runner服务

1 2 3 systemctl daemon-reload systemctl enable tcmu-runner systemctl start tcmu-runner

源码地址:https://github.com/open-iscsi/tcmu-runner

安装RTSLIB-FB 1 2 cd rtslib-fb python setup.py install

源码地址:https://github.com/open-iscsi/rtslib-fb

安装CONFIGSHELL-FB 1 2 cd configshell-fb python setup.py install

源码地址:https://github.com/open-iscsi/configshell-fb

安装TARGETCLI-FB 1 2 3 4 cd targetcli-fb python setup.py install mkdir /etc/target mkdir /var/target

源码地址:https://github.com/open-iscsi/targetcli-fb

安装CEPH-ISCSI 1 2 3 4 cd ceph-iscsi python setup.py install --install-scripts=/usr/bin cp ./usr/lib/systemd/system/rbd-target-gw.service /lib/systemd/system/ cp ./usr/lib/systemd/system/rbd-target-api.service /lib/systemd/system/

启动rbd-target-gw和rbd-target-api服务

1 2 3 4 5 systemctl daemon-reload systemctl enable rbd-target-gw systemctl enable rbd-target-api systemctl start rbd-target-gw systemctl start rbd-target-api

源码地址:https://github.com/ceph/ceph-iscsi

配置iscsi-gateway.cfg 创建一个/etc/ceph/iscsi-gateway.cfg文件,在文件中增加如下内容

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 [config] # Name of the Ceph storage cluster. A suitable Ceph configuration file allowing # access to the Ceph storage cluster from the gateway node is required, if not # colocated on an OSD node. cluster_name = ceph # Place a copy of the ceph cluster's admin keyring in the gateway's /etc/ceph # drectory and reference the filename here gateway_keyring = ceph.client.admin.keyring # API settings. # The API supports a number of options that allow you to tailor it to your # local environment. If you want to run the API under https, you will need to # create cert/key files that are compatible for each iSCSI gateway node, that is # not locked to a specific node. SSL cert and key files *must* be called # 'iscsi-gateway.crt' and 'iscsi-gateway.key' and placed in the '/etc/ceph/' directory # on *each* gateway node. With the SSL files in place, you can use 'api_secure = true' # to switch to https mode. # To support the API, the bear minimum settings are: api_secure = false # Additional API configuration options are as follows, defaults shown. # api_user = admin # api_password = admin # api_port = 5001 # iscsi-gw 最少需要部署两个服务 trusted_ip_list = 10.53.2.13,10.53.2.16,10.53.2.19

重启rbd-target-api服务

1 2 3 systemctl daemon-reload systemctl stop rbd-target-api systemctl start rbd-target-api

*** rbd-target-api 需要依赖 rbd pool,若集群中没有rbd pool ,需要手动创建。 ***

配置iscsi-target 在iscsi-gw服务所在节点上输入gwcli进入gw交互模式

创建target iqn eg:

1 2 /> cd /iscsi-target/iscsi-target> create iqn.2019-08.com.hna.iscsi-gw:iscsi-igw

创建iscsi-gateway eg:

1 2 3 4 /iscsi-target> cd iqn.2019-08.com.hna.iscsi-gw:iscsi-igw/gateways/iscsi-target...-igw/gateways> create ceph13 10.53.2.13 /iscsi-target...-igw/gateways> create ceph16 10.53.2.16 /iscsi-target...-igw/gateways> create ceph19 10.53.2.19

创建gateway时,输入的gateway名称为hostname, ip要与其对应上,并且在/etc/hosts文件中也要将hostname和ip对应上。

创建rbd image eg:

1 2 3 /iscsi-target...-igw/gateways> cd /disks /disks> create pool=sata image=vol_sata_23t size=23T /disks> create pool=ssd image=vol_ssd_1800g size=1800G

创建client iqn eg:

1 2 3 4 5 6 7 8 /disks> cd /iscsi-targets/iqn.2019-08.com.hna.iscsi-gw:iscsi-igw/hosts//iscsi-target...csi-igw/hosts> create iqn.2019-08.com.example:2b14c50a /disks> cd /iscsi-targets/iqn.2019-08.com.hna.iscsi-gw:iscsi-igw/hosts//iscsi-target...csi-igw/hosts> create iqn.2019-08.com.example:479172ae /disks> cd /iscsi-targets/iqn.2019-08.com.hna.iscsi-gw:iscsi-igw/hosts//iscsi-target...csi-igw/hosts> create iqn.2019-08.com.example:066f5c54

设置chap用户名密码

1 2 3 4 5 6 7 8 /> cd iscsi-targets/iqn.2019-08.com.hna.iscsi-gw:iscsi-igw/hosts/iqn.2019-08.com.example:2b14c50a//iscsi-target...mple:2b14c50a> auth username=iqn.2019-08.com.example password=1qaz2wsx3edc /> cd iscsi-targets/iqn.2019-08.com.hna.iscsi-gw:iscsi-igw/hosts/iqn.2019-08.com.example:479172ae//iscsi-target...mple:479172ae> auth username=iqn.2019-08.com.example password=1qaz2wsx3edc /> cd iscsi-targets/iqn.2019-08.com.hna.iscsi-gw:iscsi-igw/hosts/iqn.2019-08.com.example:066f5c54//iscsi-target...mple:066f5c54> auth username=iqn.2019-08.com.example password=1qaz2wsx3edc

创建host-groups eg:

1 2 /> cd iscsi-targets/iqn.2019-08.com.hna.iscsi-gw:iscsi-igw/host-groups//iscsi-target...w/host-groups> create xen

添加client iqn eg:

1 2 3 4 /> cd iscsi-targets/iqn.2019-08.com.hna.iscsi-gw:iscsi-igw/host-groups/xen//iscsi-target...st-groups/xen> host add iqn.2019-08.com.example:066f5c54 /iscsi-target...st-groups/xen> host add iqn.2019-08.com.example:2b14c50a /iscsi-target...st-groups/xen> host add iqn.2019-08.com.example:479172ae

添加rbd image eg:

1 2 3 /> cd iscsi-targets/iqn.2019-08.com.hna.iscsi-gw:iscsi-igw/host-groups/xen//iscsi-target...st-groups/xen> disk add sata/vol_sata_23t /iscsi-target...st-groups/xen> disk add ssd/vol_ssd_1800g

至此iscsi-gw的搭建及配置完成,客户端可以通过iscsi协议访问ceph存储了。

eg:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 /> ls o- / ......................................................................................................................... [...] o- cluster ......................................................................................................... [Clusters: 1] | o- ceph ............................................................................................................ [HEALTH_OK] | o- pools .......................................................................................................... [Pools: 3] | | o- rbd ................................................................... [(x3), Commit: 0.00Y/21703946M (0%), Used: 6823b] | | o- sata ......................................................... [(x3), Commit: 23.0T/21703946M (111%), Used: 14325219841b] | | o- ssd ........................................................ [(x3), Commit: 1800G/1744741760K (108%), Used: 27569961653b] | o- topology ............................................................................................... [OSDs: 24,MONs: 3] o- disks ...................................................................................................... [25352G, Disks: 2] | o- sata ......................................................................................................... [sata (23.0T)] | | o- vol_sata_23t .................................................................................. [sata/vol_sata_23t (23.0T)] | o- ssd ........................................................................................................... [ssd (1800G)] | o- vol_ssd_1800g ................................................................................. [ssd/vol_ssd_1800g (1800G)] o- iscsi-targets ............................................................................... [DiscoveryAuth: None, Targets: 1] o- iqn.2019-08.com.hna.iscsi-gw:iscsi-igw ........................................................................ [Gateways: 3] o- disks .......................................................................................................... [Disks: 2] | o- sata/vol_sata_23t ....................................................................................... [Owner: ceph13] | o- ssd/vol_ssd_1800g ....................................................................................... [Owner: ceph19] o- gateways ............................................................................................ [Up: 3/3, Portals: 3] | o- ceph13 ................................................................................................ [10.53.2.13 (UP)] | o- ceph16 ................................................................................................ [10.53.2.16 (UP)] | o- ceph19 ................................................................................................ [10.53.2.19 (UP)] o- host-groups .................................................................................................. [Groups : 1] | o- xen ................................................................................................ [Hosts: 3, Disks: 2] | o- iqn.2019-08.com.example:066f5c54 ............................................................................... [host] | o- iqn.2019-08.com.example:2b14c50a ............................................................................... [host] | o- iqn.2019-08.com.example:479172ae ............................................................................... [host] | o- sata/vol_sata_23t .............................................................................................. [disk] | o- ssd/vol_ssd_1800g .............................................................................................. [disk] o- hosts .............................................................................................. [Hosts: 3: Auth: CHAP] o- iqn.2019-08.com.example:2b14c50a .............................................. [LOGGED-IN, Auth: CHAP, Disks: 2(25352G)] | o- lun 0 ....................................................................... [sata/vol_sata_23t(23.0T), Owner: ceph13] | o- lun 1 ....................................................................... [ssd/vol_ssd_1800g(1800G), Owner: ceph19] o- iqn.2019-08.com.example:479172ae .............................................. [LOGGED-IN, Auth: CHAP, Disks: 2(25352G)] | o- lun 0 ....................................................................... [sata/vol_sata_23t(23.0T), Owner: ceph13] | o- lun 1 ....................................................................... [ssd/vol_ssd_1800g(1800G), Owner: ceph19] o- iqn.2019-08.com.example:066f5c54 .............................................. [LOGGED-IN, Auth: CHAP, Disks: 2(25352G)] o- lun 0 ....................................................................... [sata/vol_sata_23t(23.0T), Owner: ceph13] o- lun 1 ....................................................................... [ssd/vol_ssd_1800g(1800G), Owner: ceph19]

参考&鸣谢