分布式系统在极大提高可用性、容错性的同时,带来了一致性问题(CAP理论)。Raft算法能够解决分布式系统环境下的一致性问题。一致性是分布式系统容错的基本问题。一致性涉及多个服务器状态(Values)达成一致。 一旦他们就状态做出决定,该决定就是最终决定。 当大多数服务器可用时,典型的一致性算法会取得进展。

raft是工程上使用较为广泛的强一致性、去中心化、高可用的分布式协议。在这里强调了是在工程上,因为在学术理论界,最耀眼的还是大名鼎鼎的Paxos。但Paxos是:少数真正理解的人觉得简单,尚未理解的人觉得很难,大多数人都是一知半解。本人也花了很多时间、看了很多材料也没有真正理解。直到看到raft的论文,两位研究者也提到,他们也花了很长的时间来理解Paxos,他们也觉得很难理解,于是研究出了raft算法。

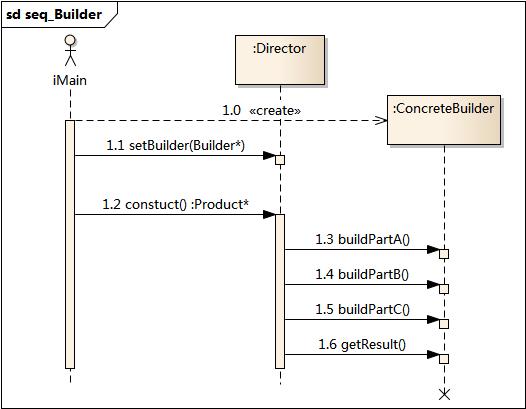

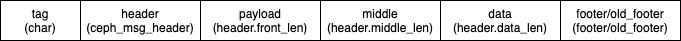

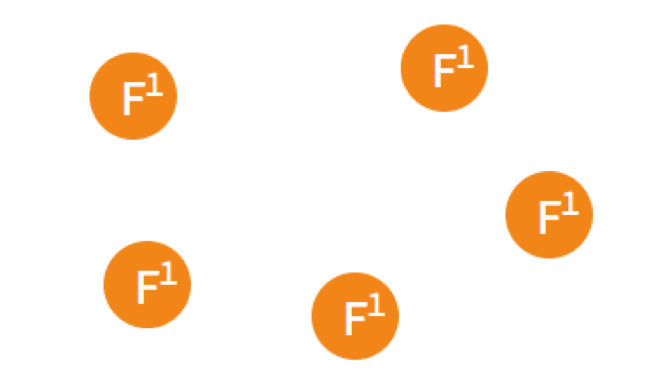

Leader选举

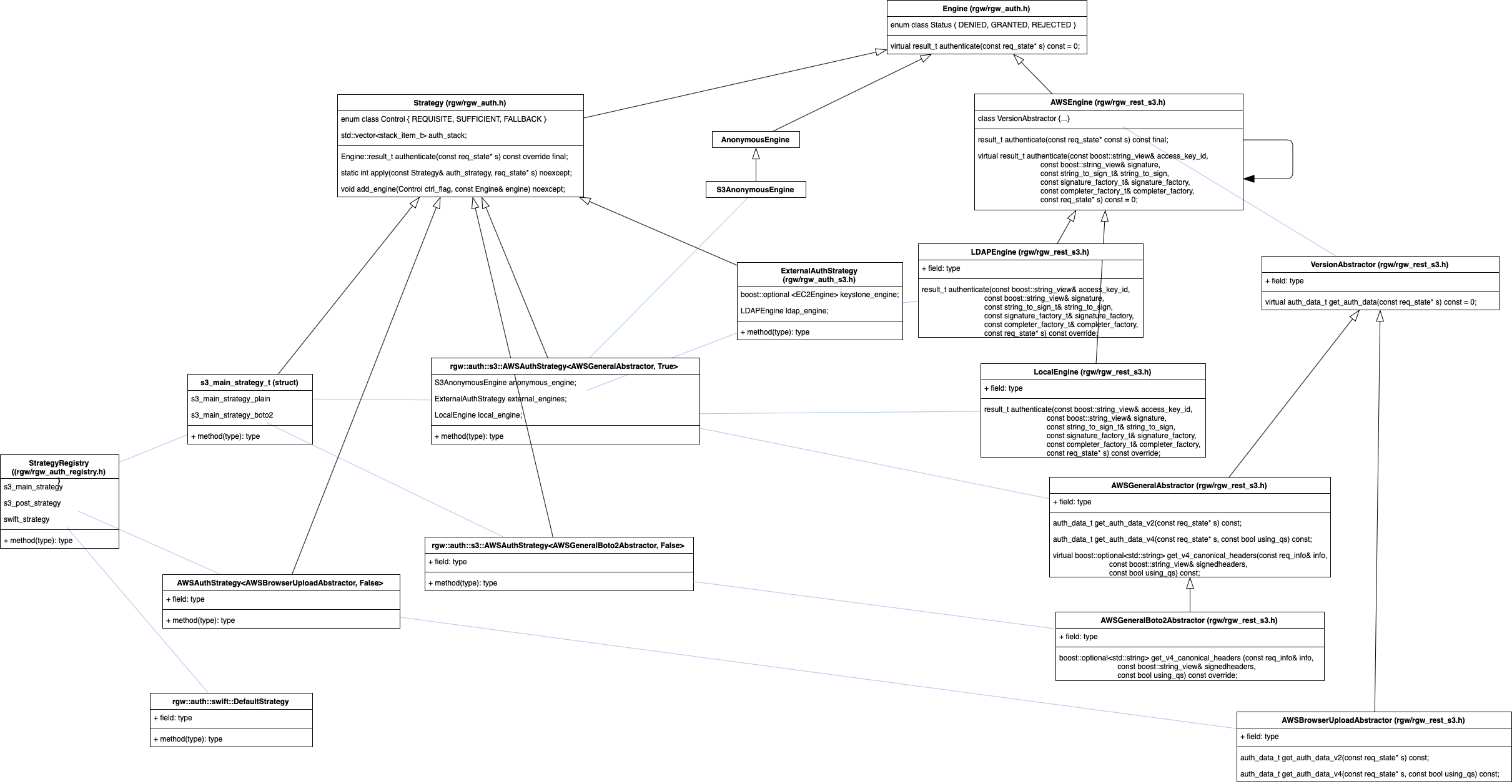

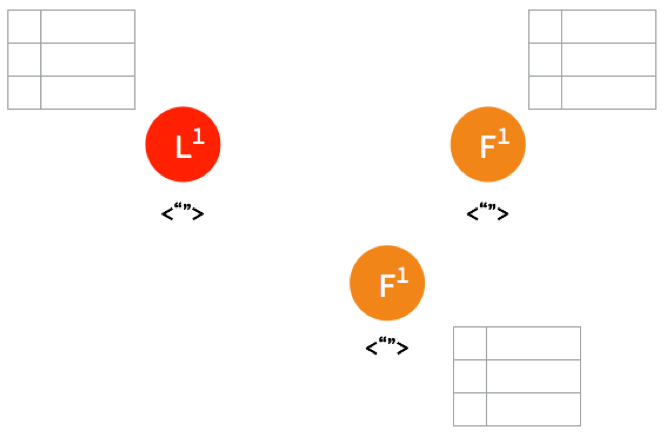

raft协议中,一个节点任一时刻处于leader, follower, candidate三个角色之一。

- leader 接受客户端请求,并向Follower同步请求日志,当日志同步到大多数节点上后告诉Follower提交日志。

- follower 接受并持久化Leader同步的日志,在Leader告之日志可以提交之后,提交日志。

- candidate Leader选举过程中的临时角色。

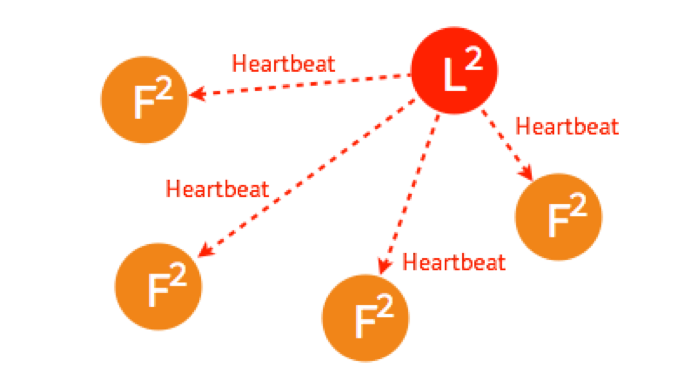

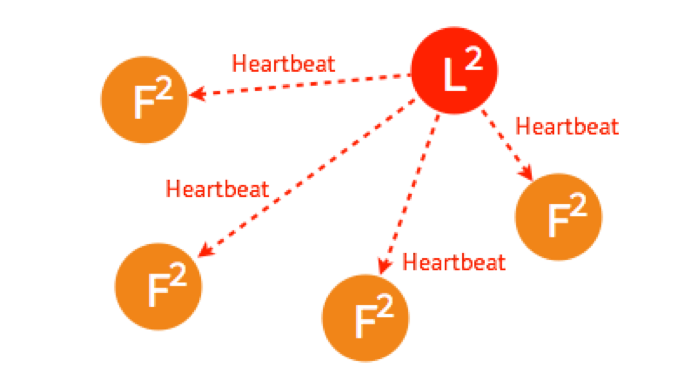

每个节点以follower角色开始,如果follower超时没有收到leader的消息,它会进入candidate角色,并发起选举投票。如果candidate收到的票数超过半数以上,则切换为leader角色。如果发现其他节点比自己更新,则主动切换到follower。总之,系统中最多只有一个leader,如果在一段时间里发现没有leader,则大家通过选举-投票选出leader。leader会不停的给follower发心跳消息,表明自己的存活状态。如果leader故障,那么follower会转换成candidate,重新选出leader。

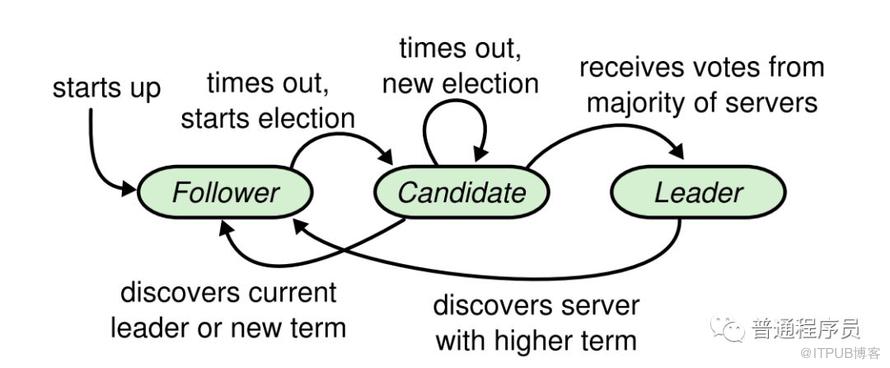

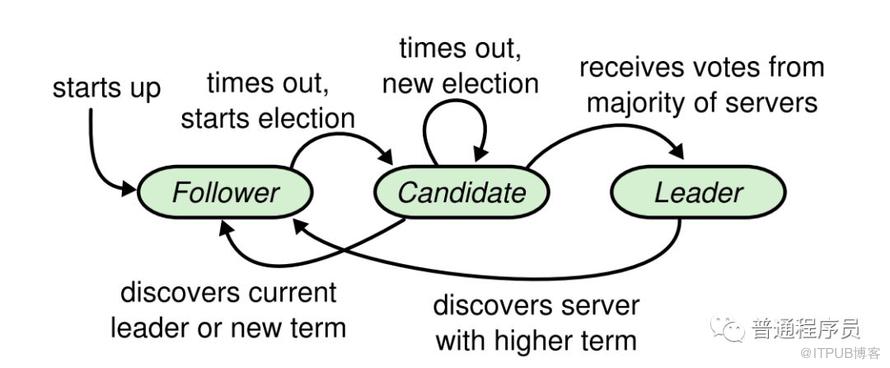

leader是大家投票选举出来的,每个leader工作一段时间,然后选出新的leader继续负责。这根民主社会的选举很像,每一届新的履职期称之为一届任期,在raft协议中,也是这样的,对应的术语叫term。term(任期)以选举(election)开始,然后就是一段或长或短的稳定工作期(normal Operation)。从上图可以看到,任期是递增的,这就充当了逻辑时钟的作用;另外,term 3展示了一种情况,就是说没有选举出leader就结束了,然后会发起新的选举。

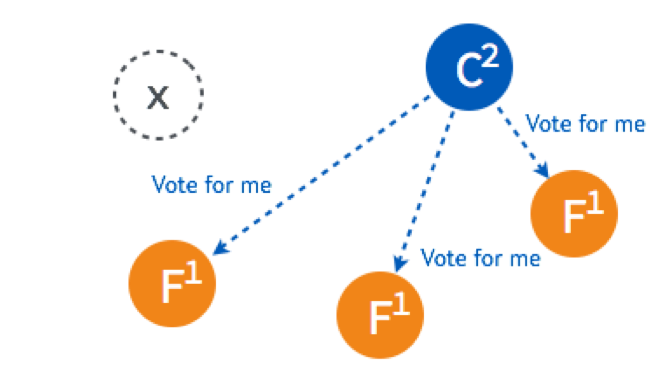

选举过程

正常情况下选举

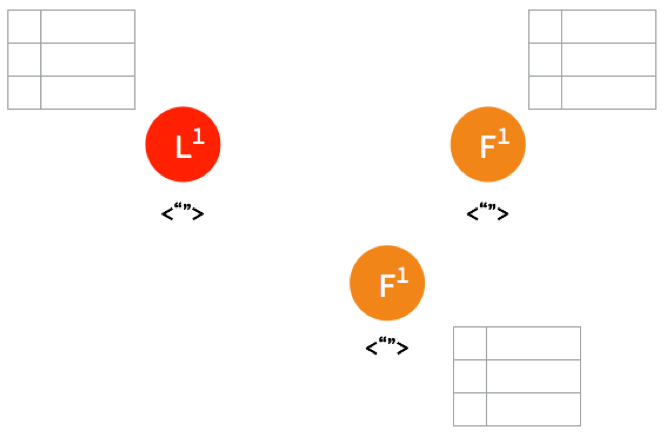

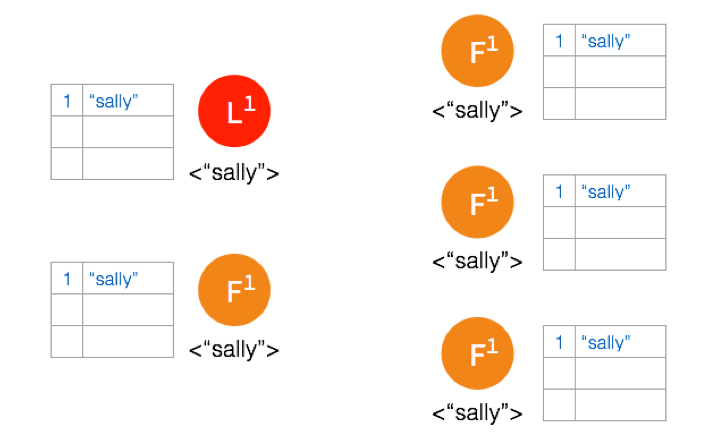

5个节点一开始的状态都是Follower。

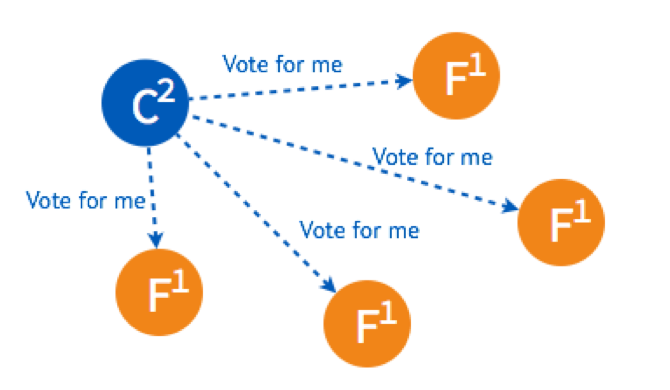

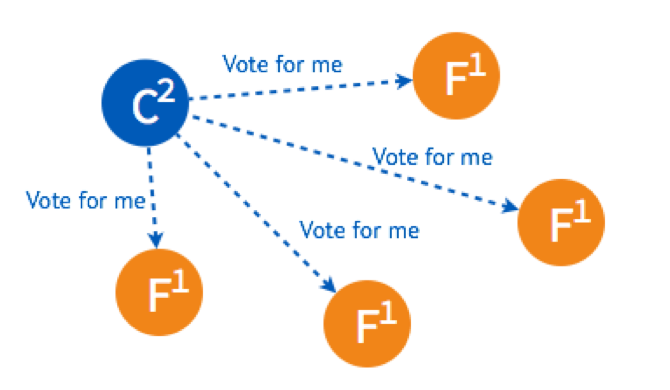

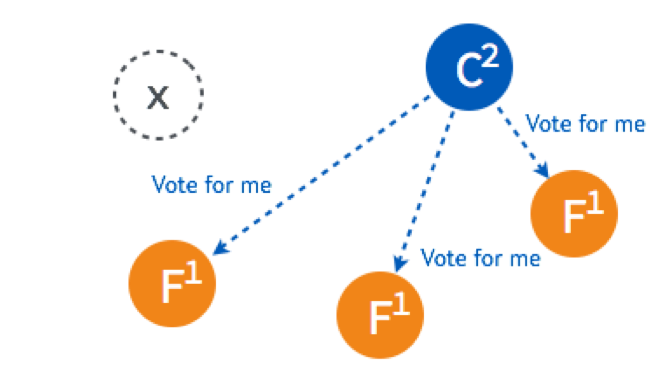

在一个节点倒计时结束(Timeout) 后,这个节点的状态变成Candidate开始选举,它给其他几个节点发送选举请求(RequestVote)

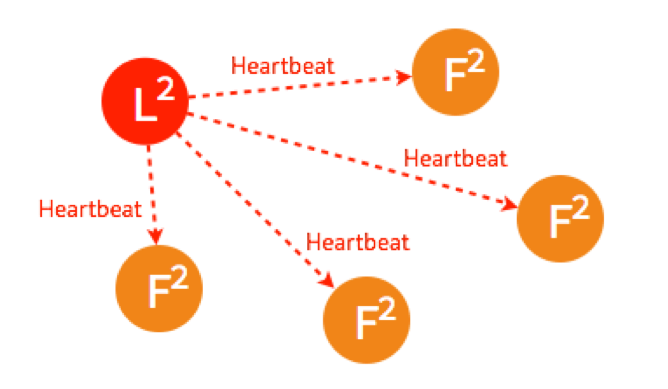

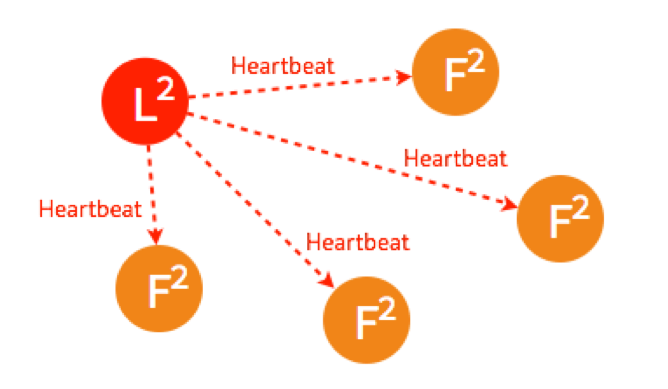

其他四个节点都返回成功,这个节点的状态由Candidate变成了Leader,并在每个一小段时间后,就给所有的Follower发送一个 Heartbeat 以保持所有节点的状态,Follower收到Leader的Heartbeat后重设Timeout。

只要有超过一半的节点投支持票了,Candidate才会被选举为Leader,5个节点的情况下,3个节点 (包括Candidate本身) 投了支持就行。

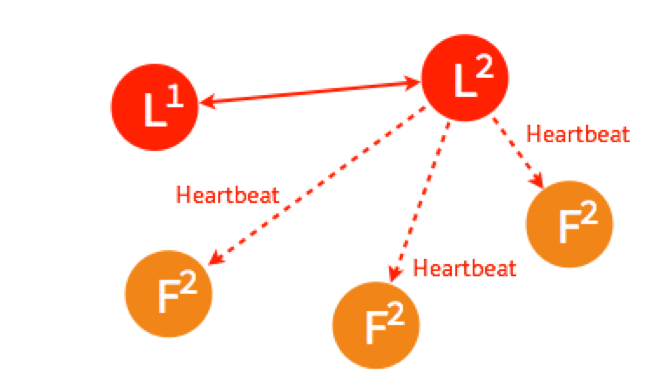

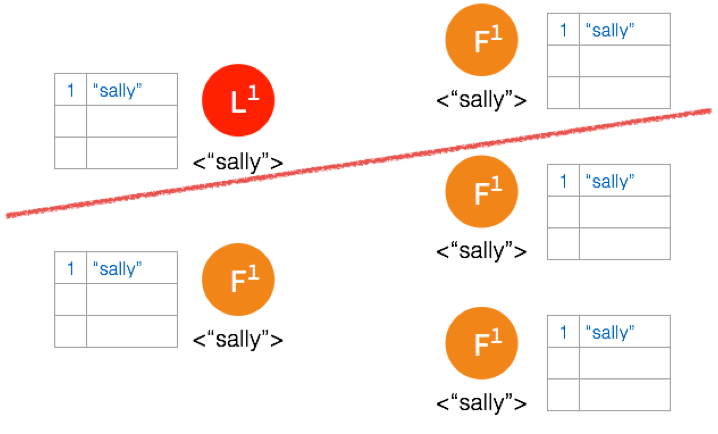

Leader 出故障情况下的选举

leader出故障挂掉了,其他四个follower将进行重新选主。

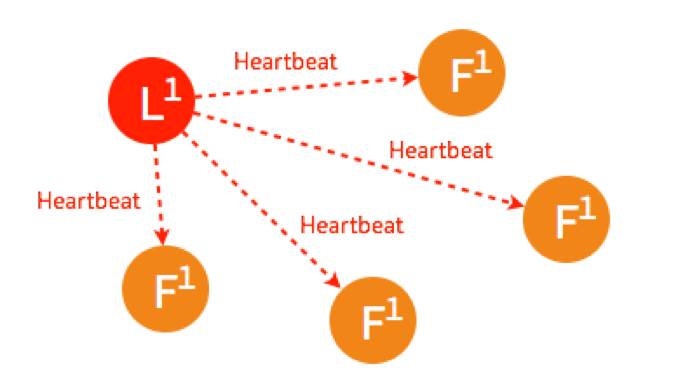

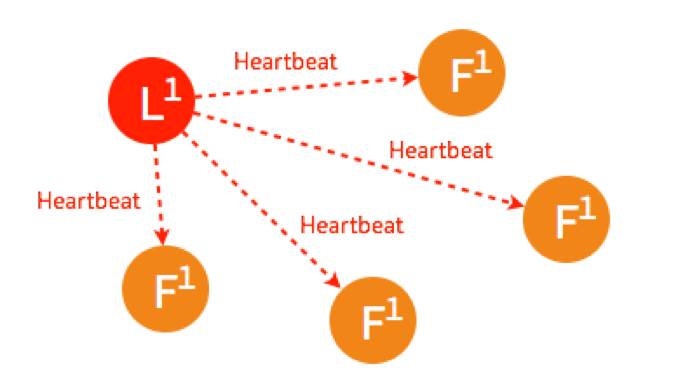

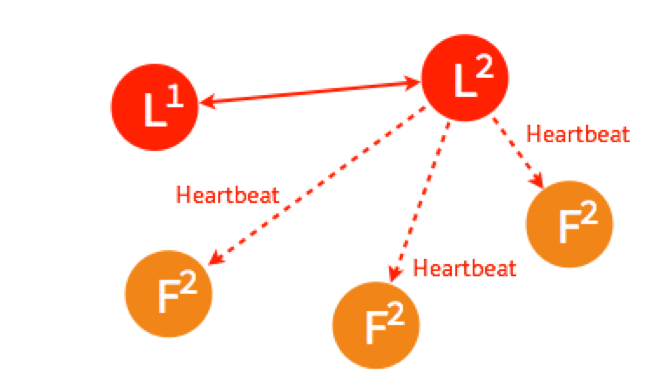

4个节点的选主过程和5个节点的类似,在选出一个新的leader后,原来的Leader恢复了又重新加入了,这个时候怎么处理?在Raft里,第几轮选举是有记录的,重新加入的Leader是第一轮选举(Term 1)选出来的,而现在的Leader则是Term 2,所有原来的Leader会自觉降级为Follower

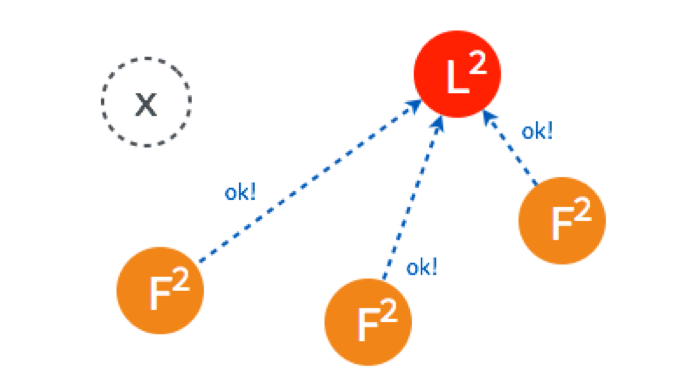

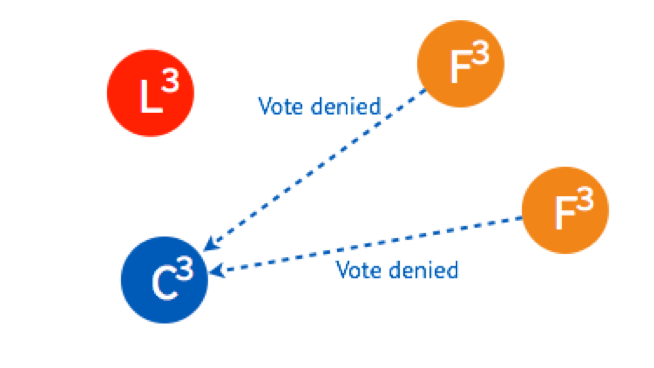

多个Candidate情况下的Leader选举

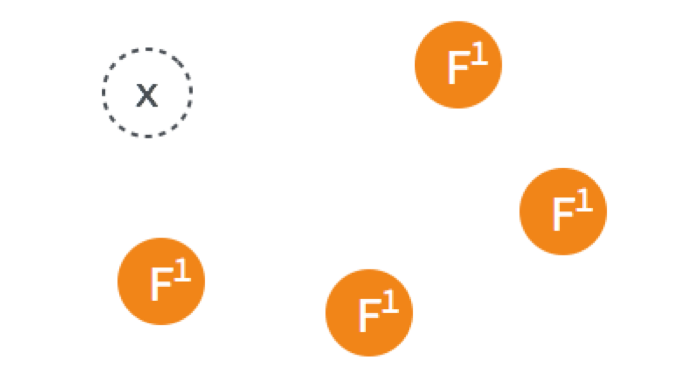

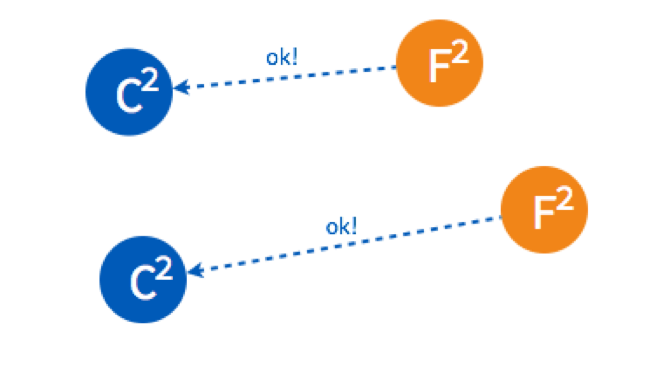

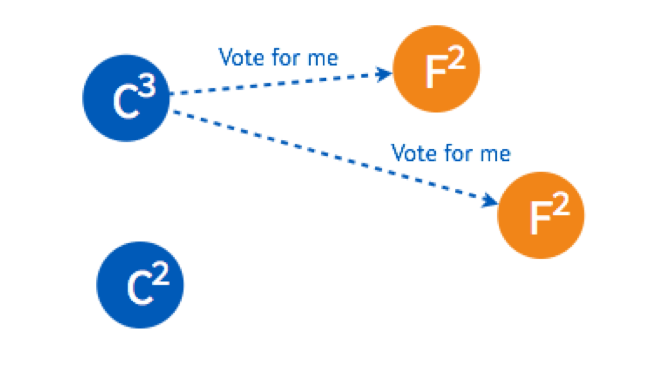

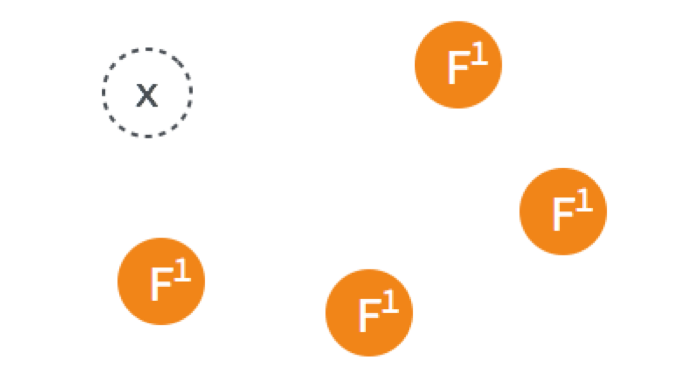

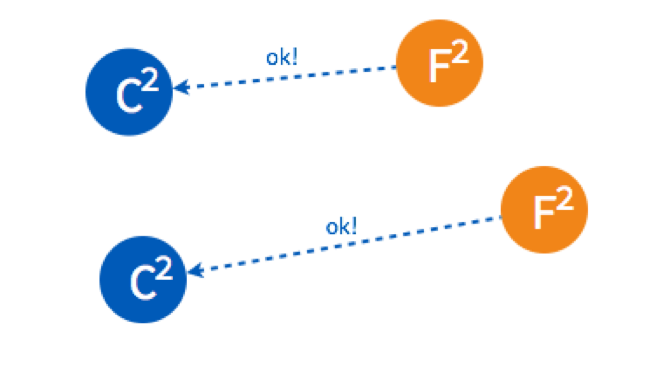

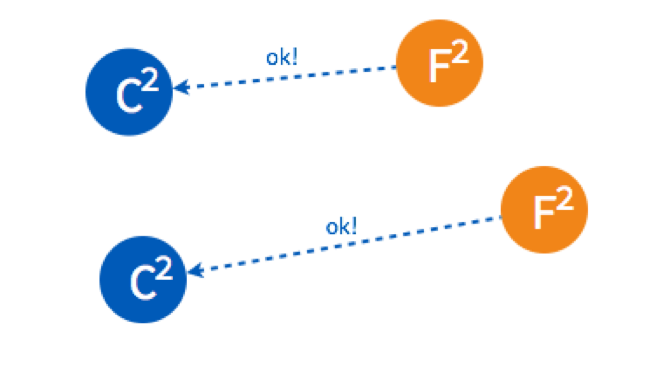

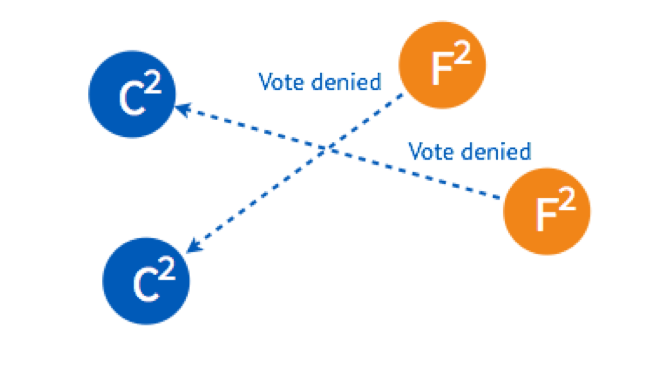

有两个Follower同时Timeout,都变成了Candidate开始选举,分别给一个Follower发送了投票请求。

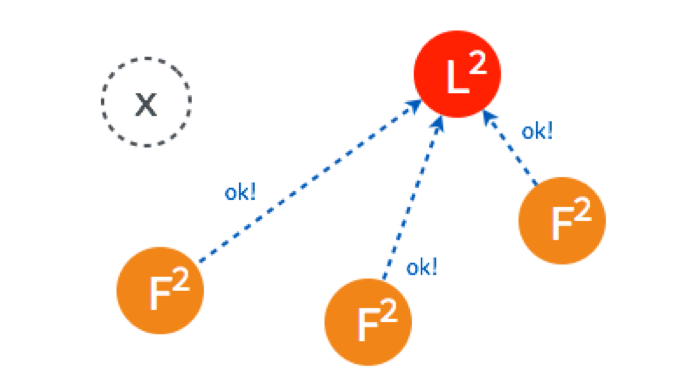

两个Follower分别返回了ok,这时两个Candidate都只有2票,要3票才能被选成Leader。

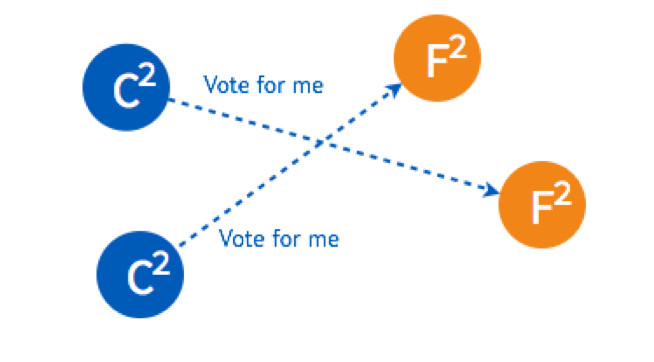

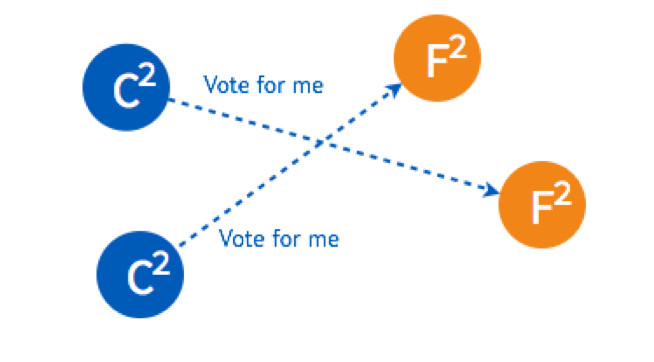

两个Candidate会分别给另外一个还没有给自己投票的Follower发送投票请求。

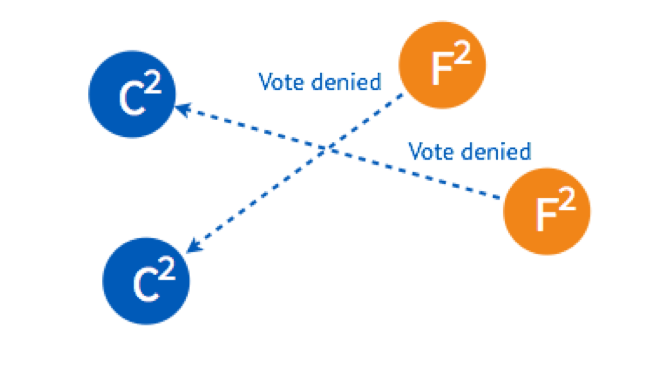

但是因为Follower在这一轮选举中,都已经投完票了,所以都拒绝了他们的请求。所以在Term 2没有Leader被选出来。

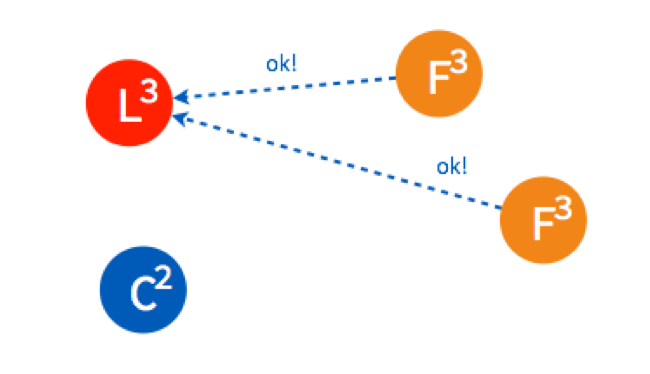

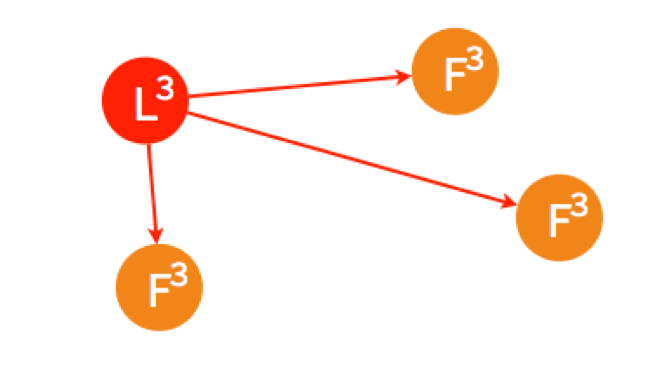

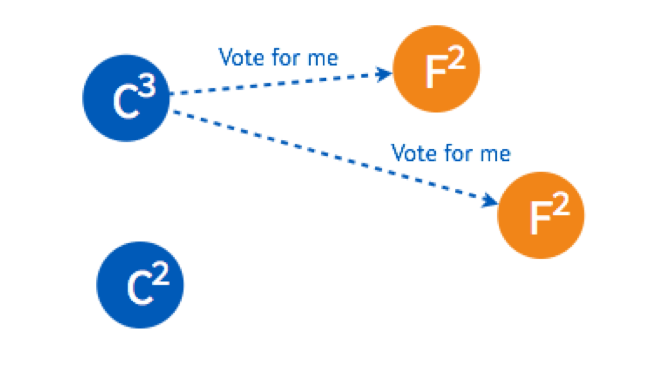

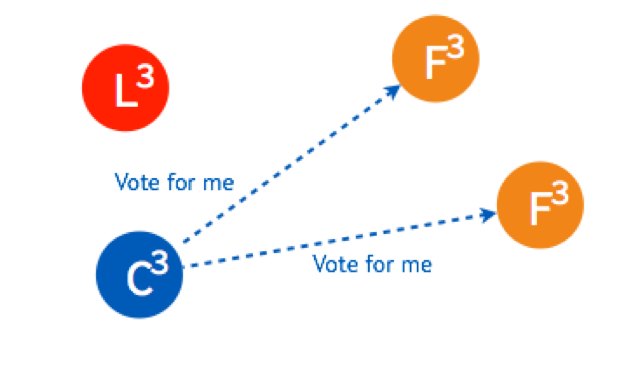

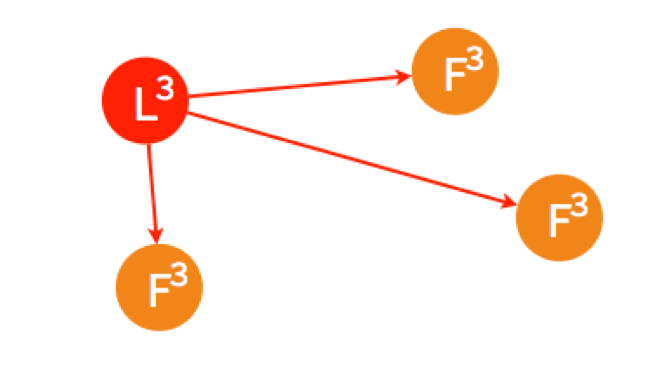

这时,两个节点的状态是Candidate,两个是Follower,但是他们的倒计时器仍然在运行,最先Timeout的那个节点会进行发起新一轮Term 3的投票。

两个Follower在Term 3还没投过票,所以返回OK,这时Candidate一共有三票,被选为了Leader。

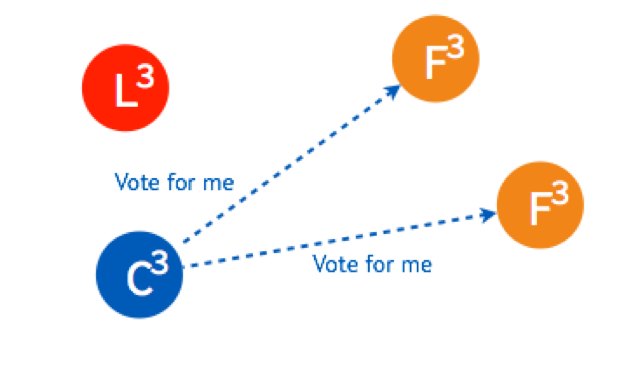

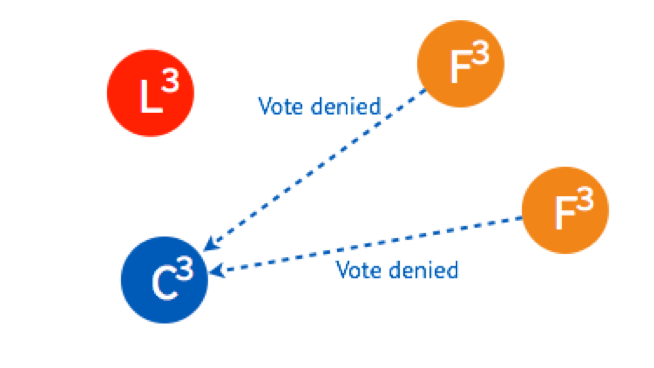

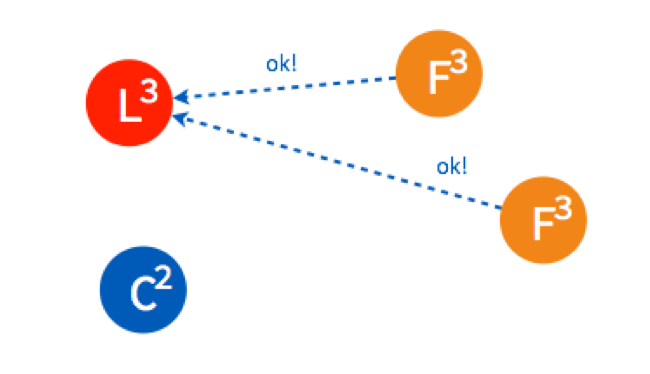

如果Leader Heartbeat的时间晚于另外一个Candidate timeout的时间,另外一个Candidate仍然会发送选举请求。

两个Follower已经投完票了,拒绝了这个Candidate的投票请求。

Leader进行Heartbeat,Candidate收到后状态自动转为Follower,完成选举。

日志复制

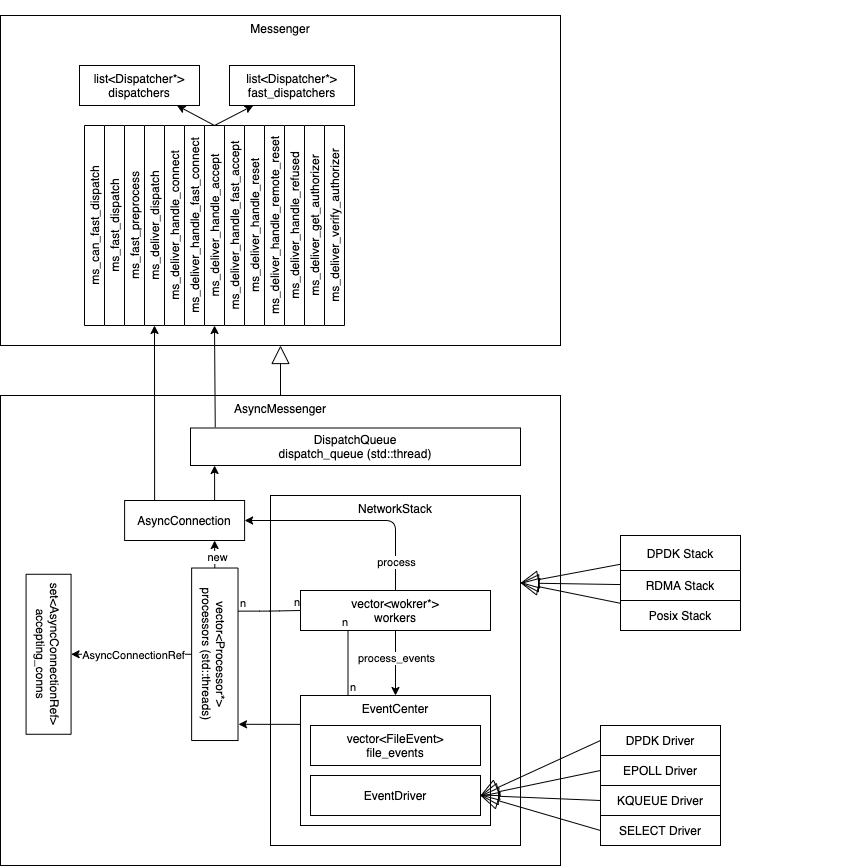

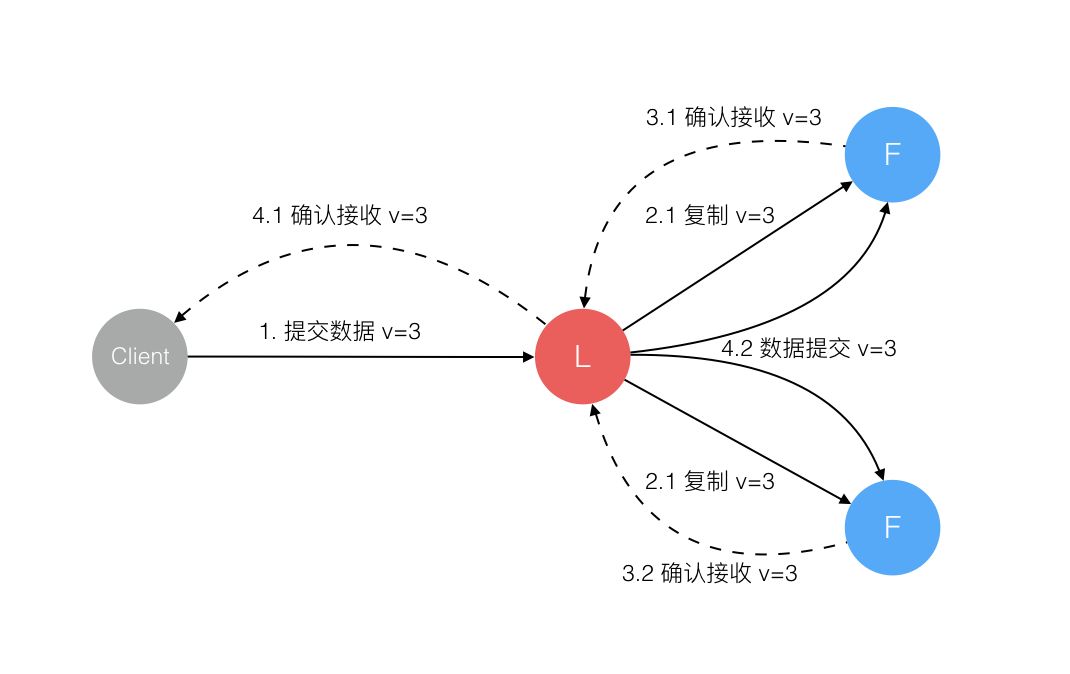

Raft 在实际应用场景中的一致性更多的是体现在不同节点之间的数据一致性,客户端发送请求到任何一个节点都能收到一致的返回,当一个节点出故障后,其他节点仍然能以已有的数据正常进行。在选主之后的复制日志就是为了达到这个目的。

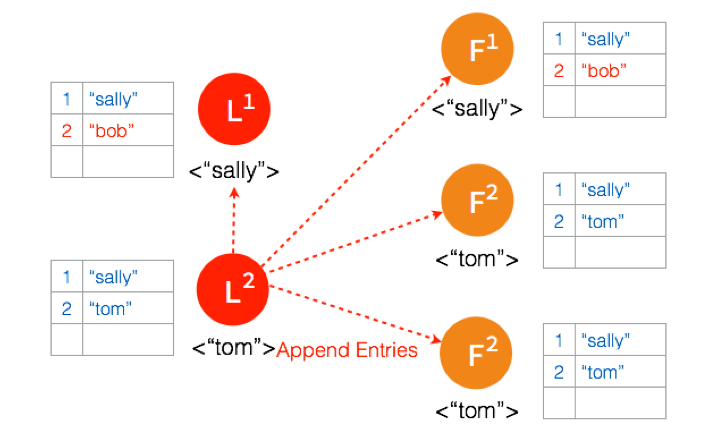

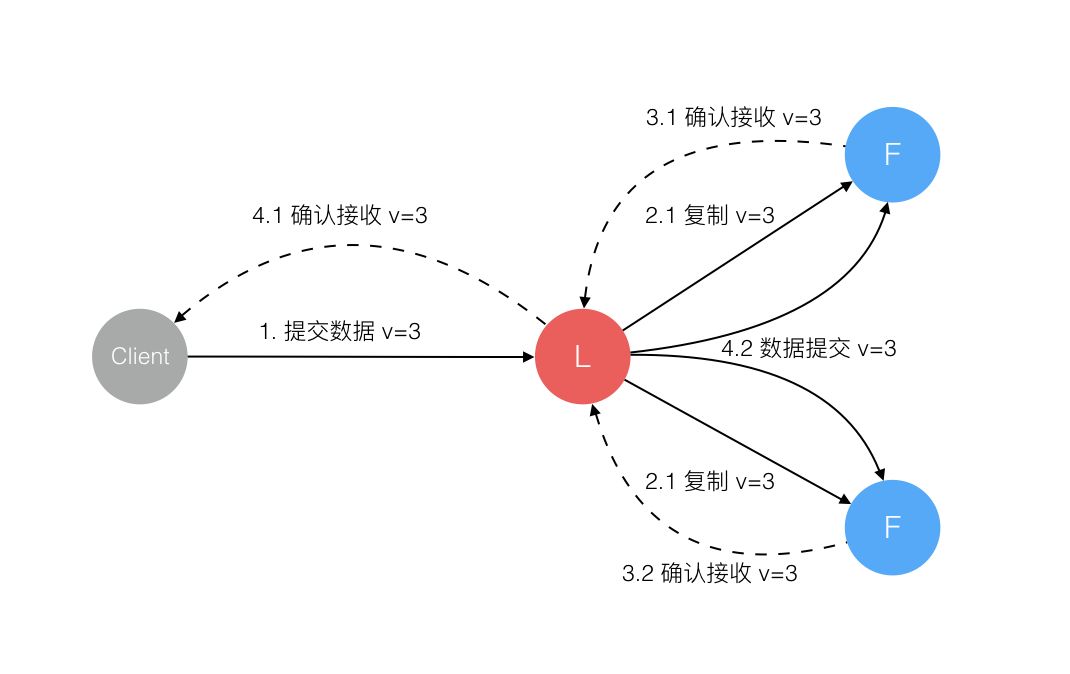

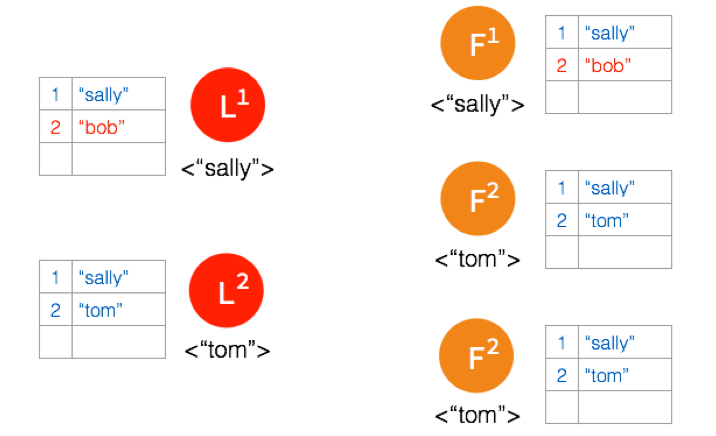

正常情况下日志复制过程

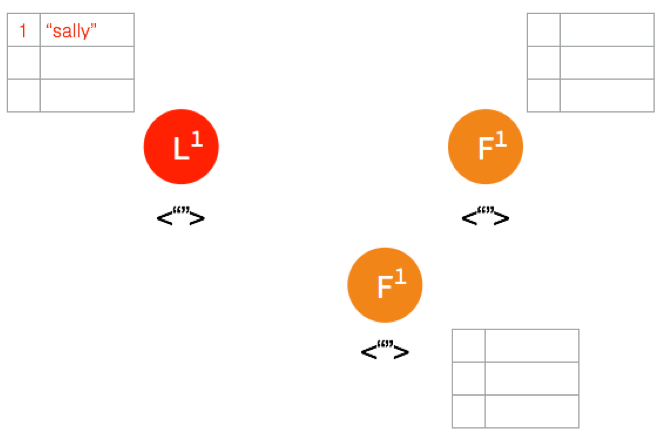

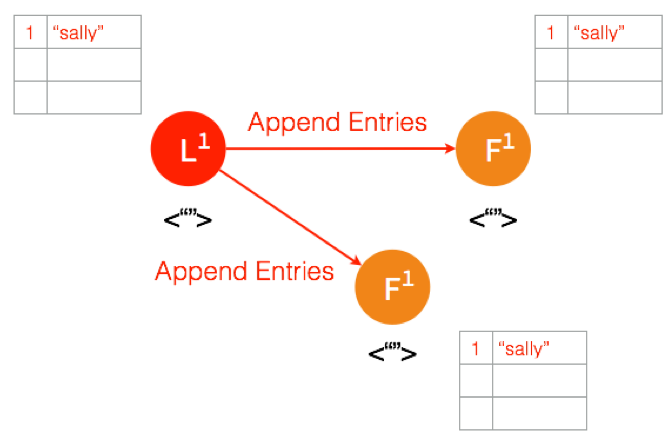

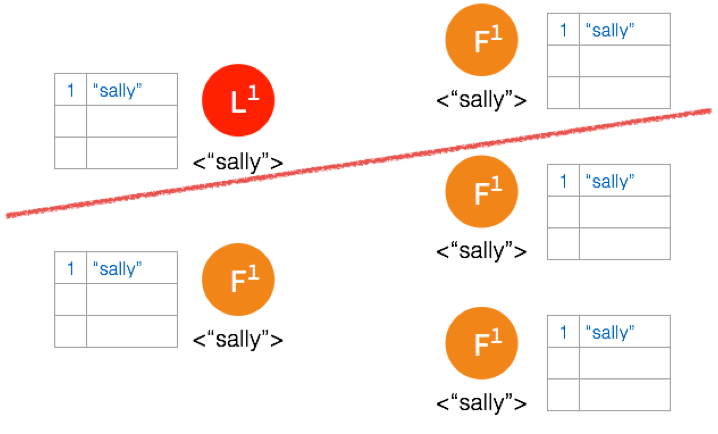

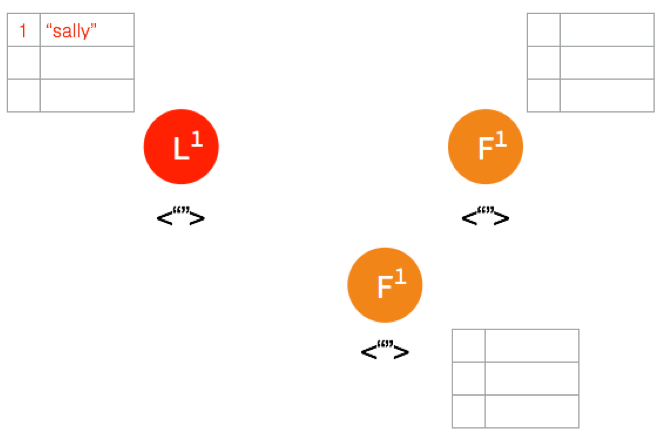

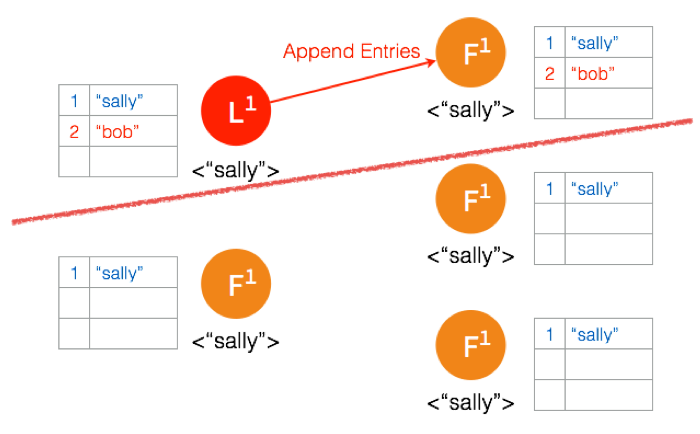

客户端发送请求给Leader,储存数据 “sally”,Leader先将数据写在本地日志,这时候数据还是Uncommitted (还没最终确认,红色表示)

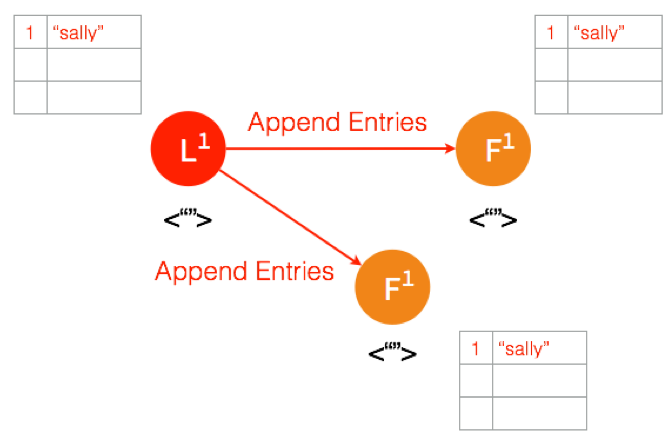

Leader给两个Follower发送AppendEntries请求,数据在Follower上没有冲突,则将数据暂时写在本地日志,Follower的数据也还是Uncommitted。

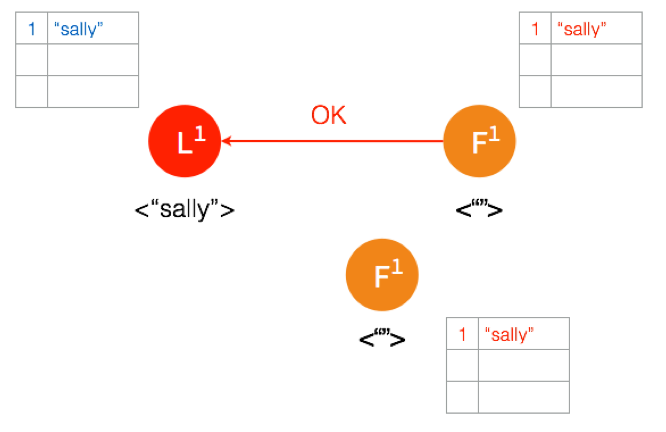

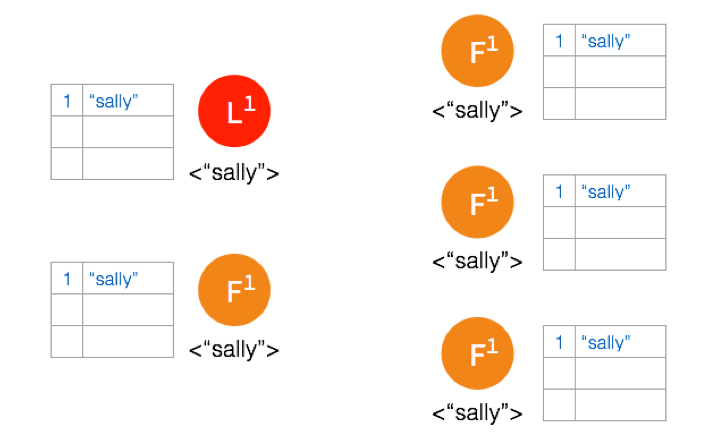

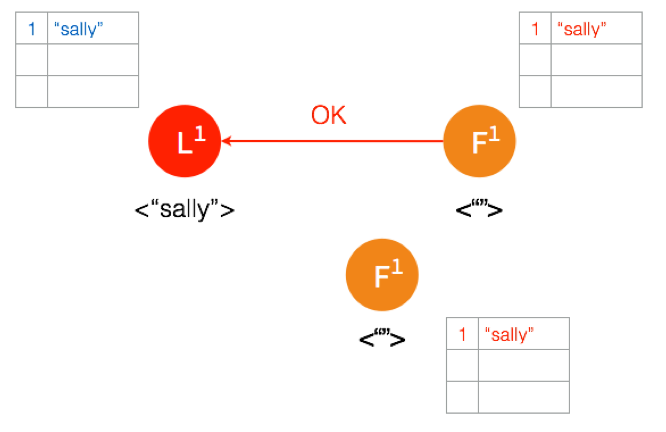

Follower将数据写到本地后,返回OK。Leader收到后成功返回,只要收到的成功的返回数量超过半数(包含Leader),Leader将数据 “sally” 的状态改成Committed。( 这个时候Leader就可以返回给客户端了)

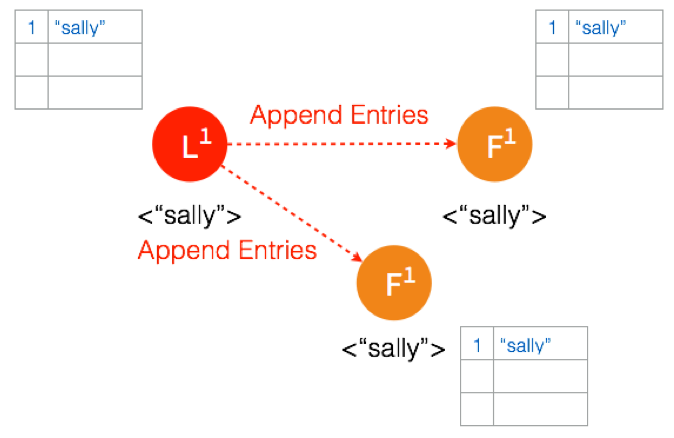

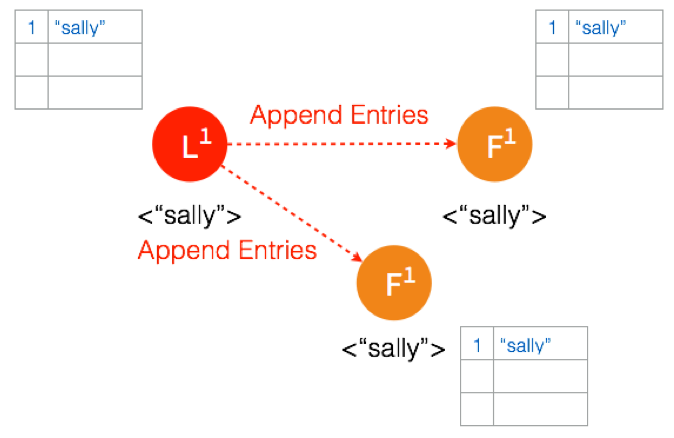

Leader再次给Follower发送AppendEntries请求,收到请求后,Follower将本地日志里Uncommitted数据改成Committed。这样就完成了一整个复制日志的过程,三个节点的数据是一致的

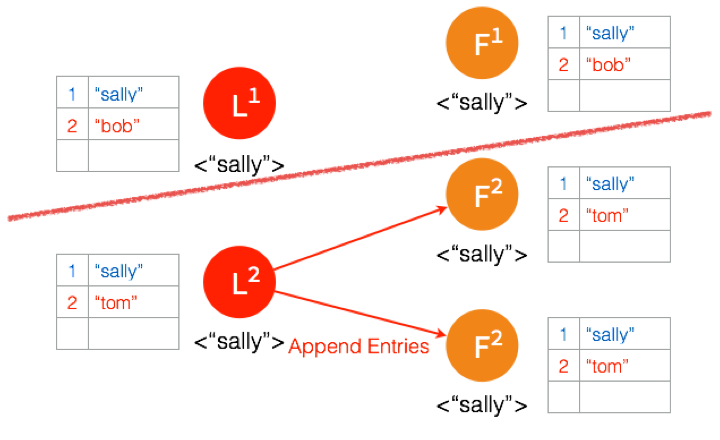

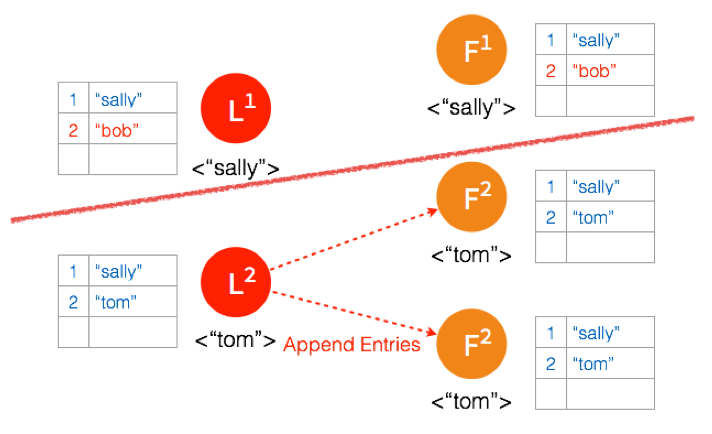

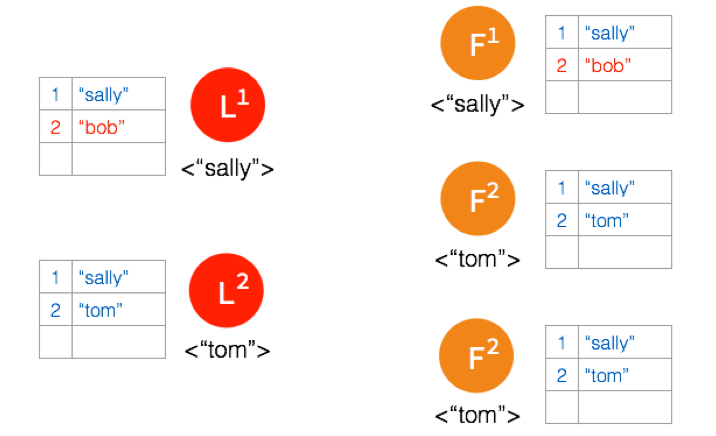

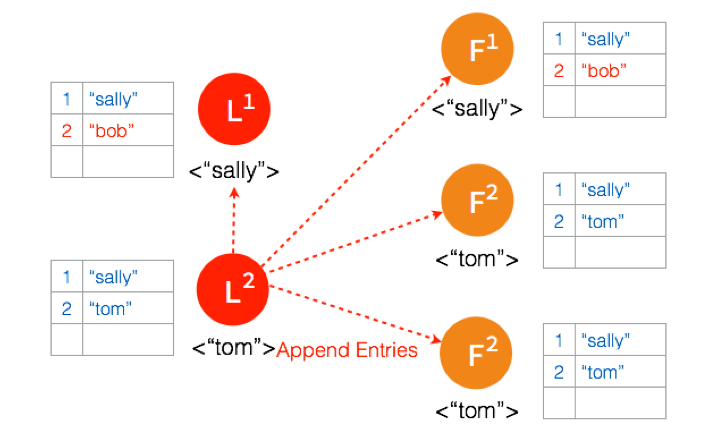

Network Partition情况下日志复制过程

在Network Partition的情况下,部分节点之间没办法互相通信,Raft 也能保证在这种情况下数据的一致性。

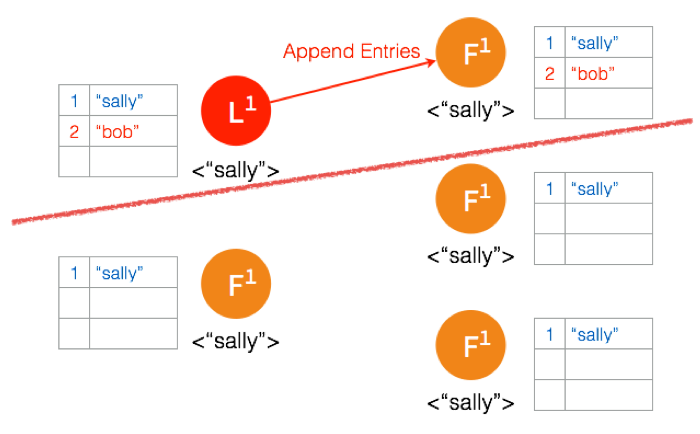

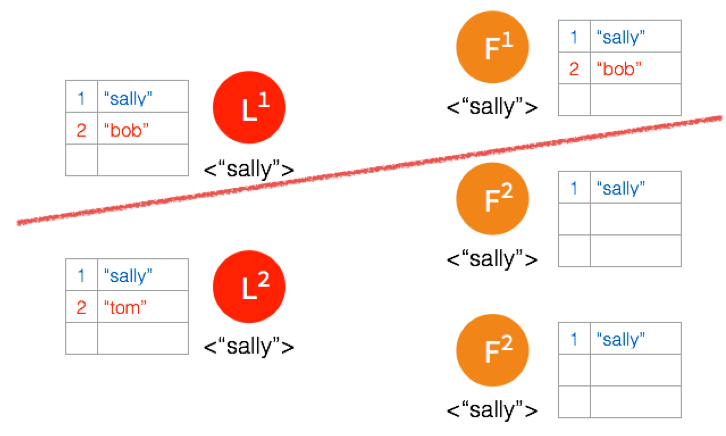

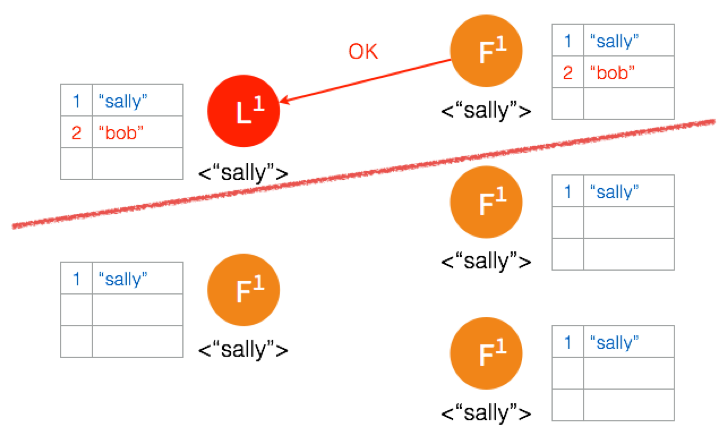

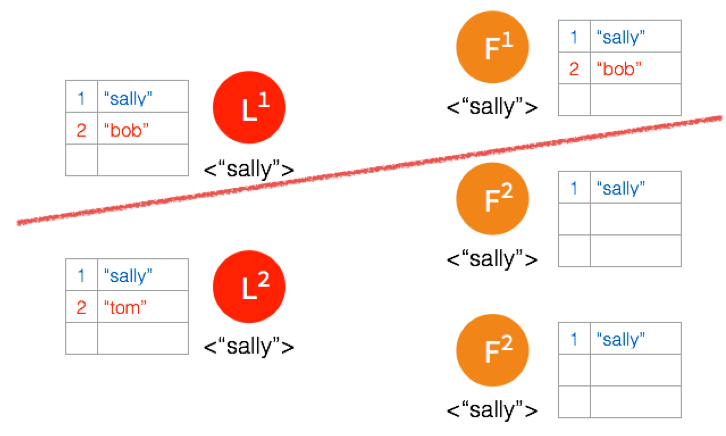

Network Partition将节点分成两边,一边有两个节点,一边三个节点。

两个节点这边已经有Leader了,来自客户端的数据“bob”通过Leader同步到Follower。

因为只有两个节点,少于3个节点,所以“bob”的状态仍是Uncommitted。所以在这里,服务器会返回错误给客户端

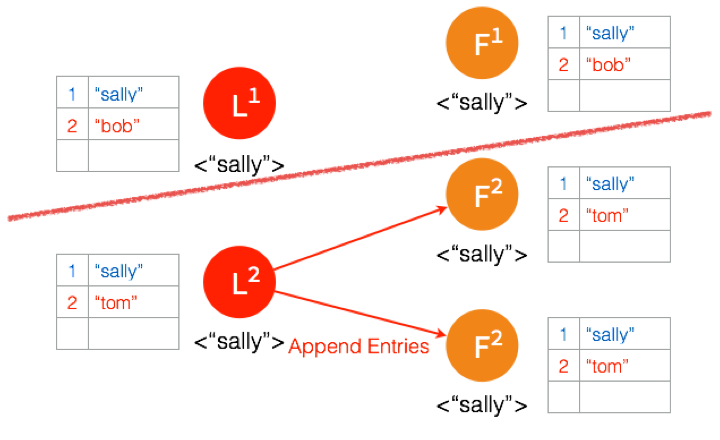

另外一个Partition有三个节点,进行重新选主。客户端数据“tom”发到新的Leader,通过和上节网络状态下相似的过程,同步到另外两个Follower。

因为这个Partition有3个节点,超过半数,所以数据“tom”都Commit了。

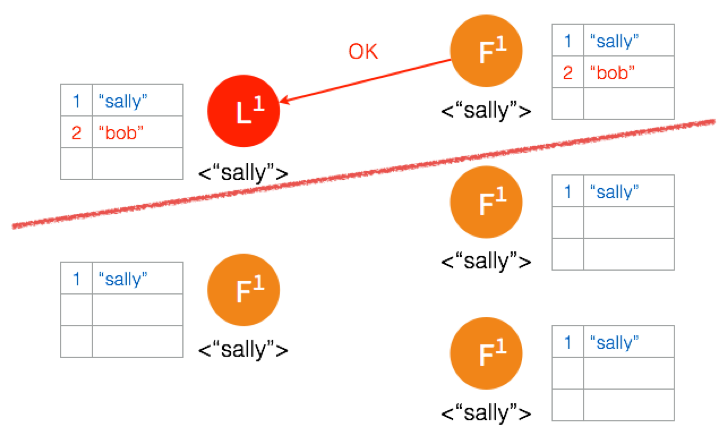

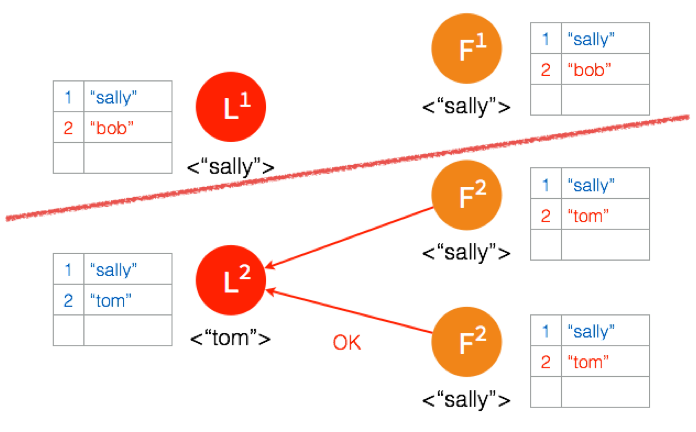

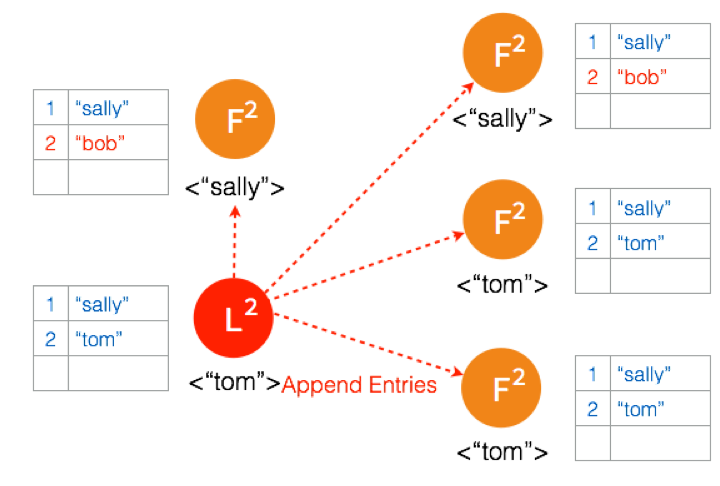

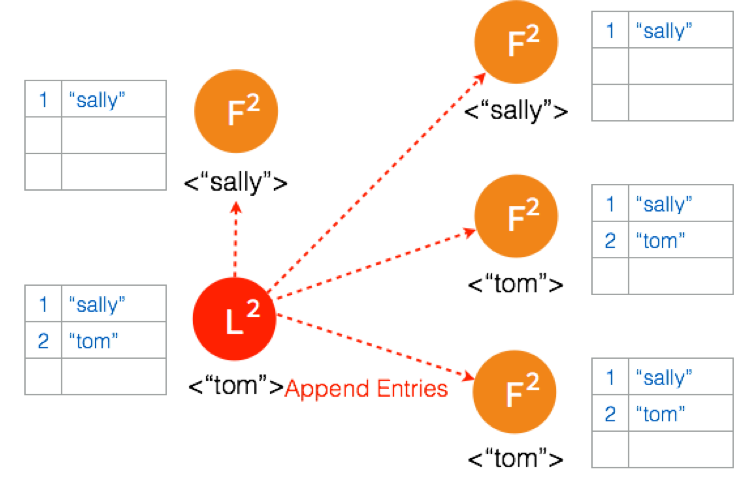

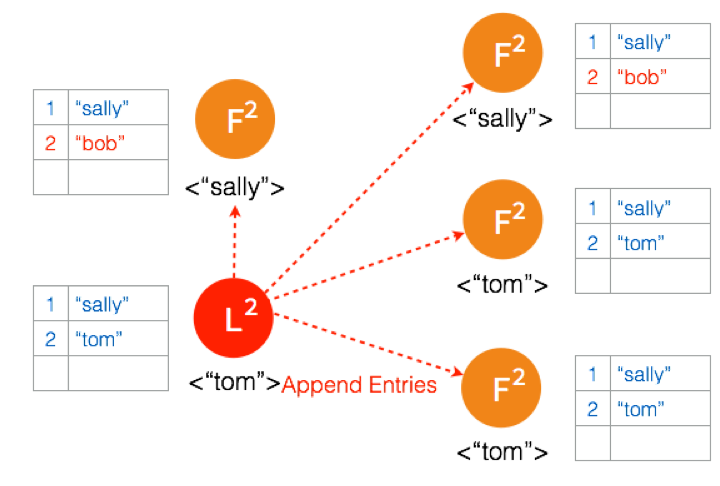

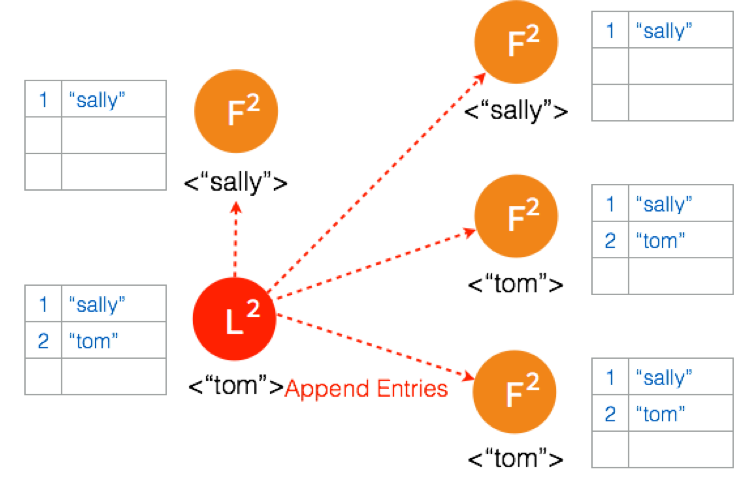

网络状态恢复,5个节点再次处于同一个网络状态下。但是这里出现了数据冲突“bob”和“tom”

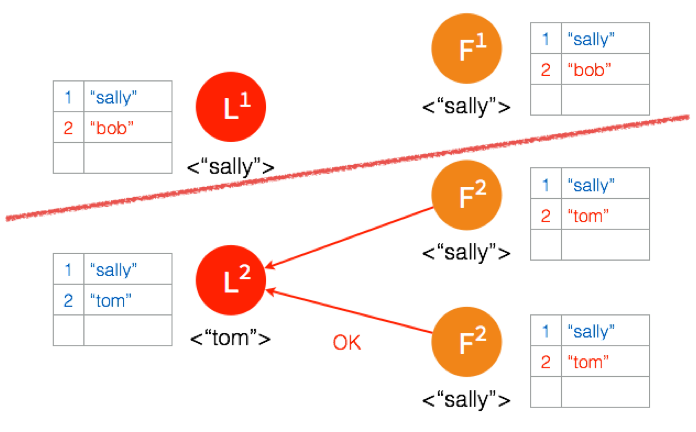

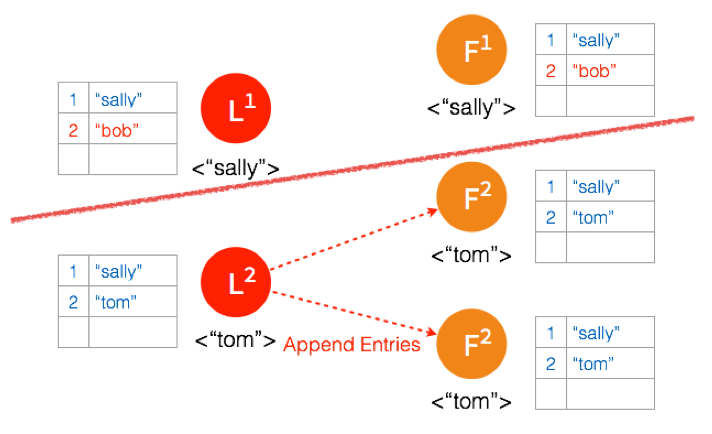

三个节点的Leader广播AppendEntries

两个节点Partition的Leader自动降级为Follower,因为这个Partition的数据 “bob” 没有Commit,返回给客户端的是错误,客户端知道请求没有成功,所以Follower在收到AppendEntries请求时,可以把“bob“删除,然后同步”tom”,通过这么一个过程,就完成了在Network Partition情况下的复制日志,保证了数据的一致性。

安全性

Election safety

选举安全性,即任一任期内最多一个leader被选出。这一点非常重要,在一个复制集中任何时刻只能有一个leader。系统中同时有多余一个leader,被称之为脑裂(brain split),这是非常严重的问题,会导致数据的覆盖丢失。

- 一个节点某一任期内最多只能投一票

- 只有获得多数票的节点才会成为

leader

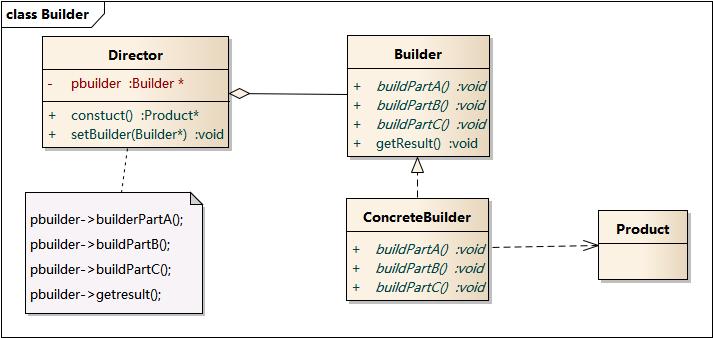

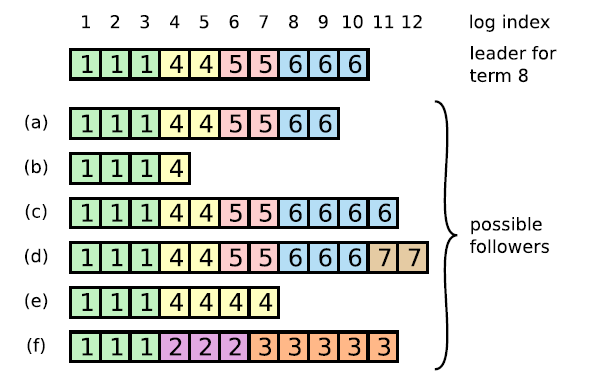

log matching

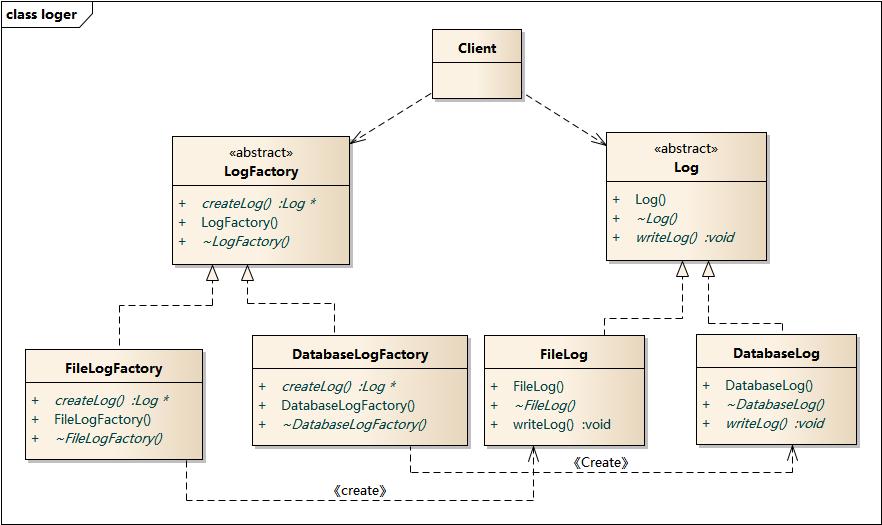

如果两个节点上的某个log entry的log index相同且term相同,那么在该index之前的所有log entry应该都是相同的。在没有异常的情况下,log matching是很容易满足的,但如果出现了node crash,情况就会变得复杂了。

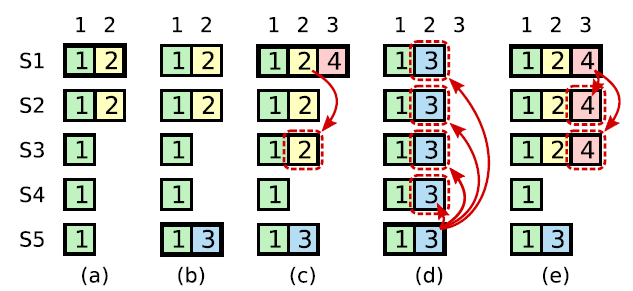

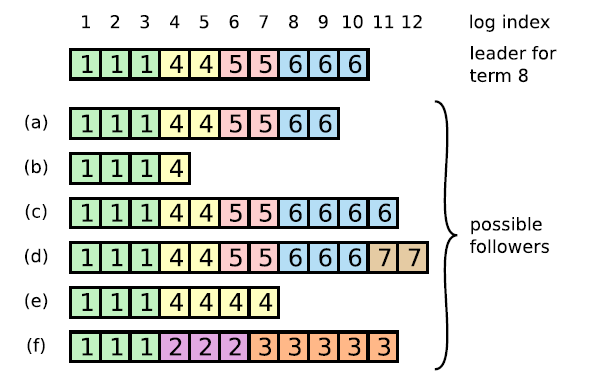

*** 上图的a-f不是6个follower,而是某个follower可能存在的六个状态 ***

- 比

leader日志少: a,b

- 比

leader日志多:c,d

- 某些位置比

leader多,某些日志比leader少:e,f

当出现了leader与follower不一致的情况,leader强制follower复制自己的log。

leader completeness

- 一个日志被复制到多数节点才算committed

- 一个节点得到多数的投票才能成为

leader,而节点A给节点B投票的其中一个前提是,B的日志不能比A的日志旧

State Machine Safety

如果节点将某一位置的log entry应用到了状态机,那么其他节点在同一位置不能应用不同的日志。简单点来说,所有节点在同一位置(index in log entries)应该应用同样的日志。

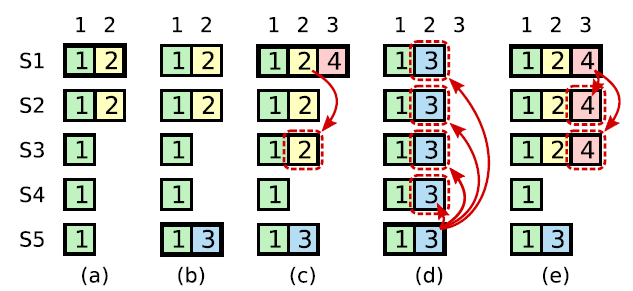

- (a)时刻, s1是leader,在term2提交的日志只赋值到了s1 s2两个节点就crash了。

- (b)时刻, s5成为了term 3的

leader,日志只赋值到了s5,然后crash。

- (c)时刻,s1又成为了term 4的

leader,开始赋值日志,于是把term2的日志复制到了s3,此刻,可以看出term2对应的日志已经被复制到了多数节点上,因此是committed,可以被状态机应用。

- (d)时刻,s1又crash了,s5重新当选,然后将term3的日志复制到所有节点,这就出现了一种奇怪的现象:被复制到大多数节点(或者说可能已经应用)的日志被回滚。

因为term4时的leader s1在(c)时刻提交了之前term2任期的日志。某个leader选举成功之后,不会直接提交前任leader时期的日志,而是通过提交当前任期的日志的时候“顺手”把之前的日志也提交了,具体怎么实现了,在log matching部分有详细介绍。那么问题来了,如果leader被选举后没有收到客户端的请求呢,论文中有提到,在任期开始的时候发立即尝试复制、提交一条空的log。

因此,在上图中,不会出现(C)时刻的情况,即term4任期的leader s1不会复制term2的日志到s3。而是如同(e)描述的情况,通过复制-提交term4的日志顺便提交term2的日志。如果term4的日志提交成功,那么term2的日志也一定提交成功,此时即使s1 crash,s5也不会重新当选。

参考&鸣谢